In the following tutorial, we walk you through configuring Cypress to run tests in parallel with CircleCI.

Want to see the final project in action? Check out the video.


Project Setup

Let's start by setting up a basic Cypress project:

$ mkdir cypress-parallel && cd cypress-parallel

$ npm init -y

$ npm install cypress --save-dev

$ ./node_modules/.bin/cypress open

This creates a new project folder, adds a package.json file, installs Cypress, opens the Cypress GUI, and scaffolds out the following files and folders:

├── cypress
│   ├── fixtures
│   │   └── example.json
│   ├── integration
│   │   └── examples
│   │       ├── actions.spec.js
│   │       ├── aliasing.spec.js
│   │       ├── assertions.spec.js
│   │       ├── connectors.spec.js
│   │       ├── cookies.spec.js
│   │       ├── cypress_api.spec.js
│   │       ├── files.spec.js
│   │       ├── local_storage.spec.js
│   │       ├── location.spec.js
│   │       ├── misc.spec.js
│   │       ├── navigation.spec.js
│   │       ├── network_requests.spec.js
│   │       ├── querying.spec.js
│   │       ├── spies_stubs_clocks.spec.js
│   │       ├── traversal.spec.js
│   │       ├── utilities.spec.js
│   │       ├── viewport.spec.js
│   │       ├── waiting.spec.js
│   │       └── window.spec.js
│   ├── plugins
│   │   └── index.js
│   └── support
│       ├── commands.js
│       └── index.js
└── cypress.json

Close the Cypress GUI. Then, remove the "cypress/integration/examples" folder and add four sample spec files:

sample1.spec.js

describe('Cypress parallel run example - 1', () => {

it('should display the title', () => {

cy.visit(`https://mherman.org`);

cy.get('a').contains('Michael Herman');

});

});

sample2.spec.js

describe('Cypress parallel run example - 2', () => {

it('should display the blog link', () => {

cy.visit(`https://mherman.org`);

cy.get('a').contains('Blog');

});

});

sample3.spec.js

describe('Cypress parallel run example - 3', () => {

it('should display the about link', () => {

cy.visit(`https://mherman.org`);

cy.get('a').contains('About');

});

});

sample4.spec.js

describe('Cypress parallel run example - 4', () => {

it('should display the rss link', () => {

cy.visit(`https://mherman.org`);

cy.get('a').contains('RSS');

});

});

Your project should now have the following structure:

├── cypress
│   ├── fixtures
│   │   └── example.json
│   ├── integration
│   │   ├── sample1.spec.js
│   │   ├── sample2.spec.js
│   │   ├── sample3.spec.js
│   │   └── sample4.spec.js
│   ├── plugins
│   │   └── index.js
│   └── support
│       ├── commands.js
│       └── index.js
├── cypress.json
├── package-lock.json
└── package.json

Make sure the tests pass before moving on:

$ ./node_modules/.bin/cypress run

Spec Tests Passing Failing Pending Skipped

┌────────────────────────────────────────────────────────────────────────────────────────────────┐

│ ✔ sample1.spec.js 00:02 1 1 - - - │

├────────────────────────────────────────────────────────────────────────────────────────────────┤

│ ✔ sample2.spec.js 00:01 1 1 - - - │

├────────────────────────────────────────────────────────────────────────────────────────────────┤

│ ✔ sample3.spec.js 00:02 1 1 - - - │

├────────────────────────────────────────────────────────────────────────────────────────────────┤

│ ✔ sample4.spec.js 00:01 1 1 - - - │

└────────────────────────────────────────────────────────────────────────────────────────────────┘

All specs passed! 00:08 4 4 - - -

Once done, add a .gitignore file:

node_modules/

cypress/videos/

cypress/screenshots/

Create a new repository on GitHub called cypress-parallel, init a new git repo locally, and then commit and push your code up to GitHub.

CircleCI Setup

Sign up for a CircleCI account if you don't already have one. Then, add cypress-parallel as a new project on CircleCI.

Review the Getting Started guide for info on how to set up and work with projects on CircleCI.

Add a new folder to the project root called ".circleci", and then add a new file to that folder called config.yml:

version: 2
jobs:
  build:
    working_directory: ~/tmp
    docker:
      - image: 'cypress/base:10'
        environment:
          TERM: xterm
    steps:
      - checkout
      - run: pwd
      - run: ls
      - restore_cache:
          keys:
            - 'v2-deps-{{ .Branch }}-{{ checksum "package-lock.json" }}'
            - 'v2-deps-{{ .Branch }}-'
            - v2-deps-
      - run: npm ci
      - save_cache:
          key: 'v2-deps-{{ .Branch }}-{{ checksum "package-lock.json" }}'
          paths:
            - ~/.npm
            - ~/.cache
      - persist_to_workspace:
          root: ~/
          paths:
            - .cache
            - tmp
  test:
    working_directory: ~/tmp
    docker:
      - image: 'cypress/base:10'
        environment:
          TERM: xterm
    steps:
      - attach_workspace:
          at: ~/
      - run: ls -la cypress
      - run: ls -la cypress/integration
      - run:
          name: Running cypress tests
          command: $(npm bin)/cypress run
      - store_artifacts:
          path: cypress/videos
      - store_artifacts:
          path: cypress/screenshots
workflows:
  version: 2
  build_and_test:
    jobs:
      - build
      - test:
          requires:
            - build

Here, we configured two jobs, build and test. The build job installs Cypress, and the tests are run in the test job. Both jobs run inside Docker and extend from the cypress/base image.

For more on CircleCI configuration, review the Configuration Introduction guide.

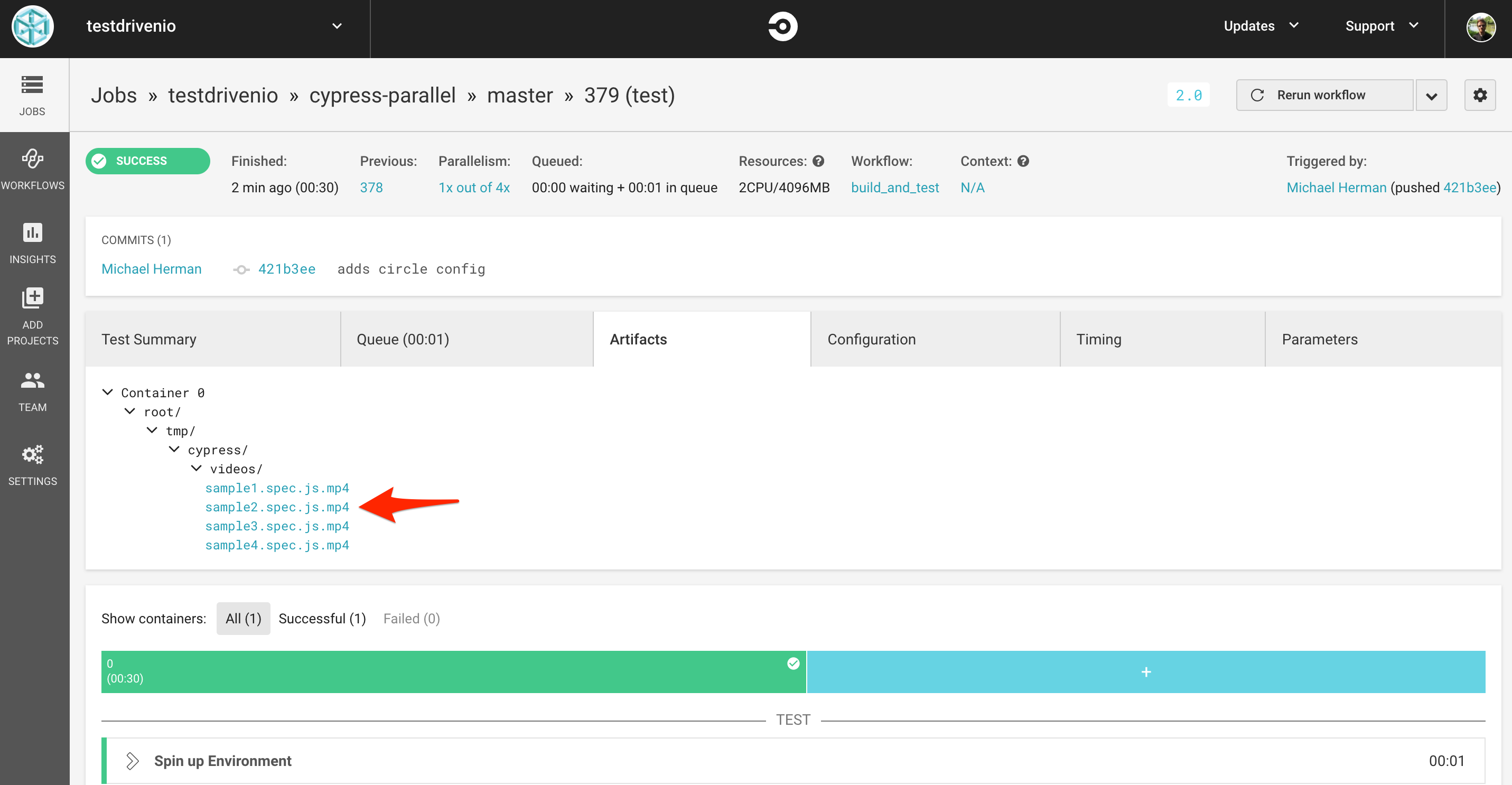

Commit and push your code to trigger a new build. Make sure both jobs pass. You should be able to see the Cypress recorded videos within the "Artifacts" tab on the test job:

With that, let's look at how to split the tests up using the config file, so the Cypress tests can be run in parallel.

Parallelism

We'll start by manually splitting them up. Update the config file like so:

version: 2
jobs:
  build:
    working_directory: ~/tmp
    docker:
      - image: 'cypress/base:10'
        environment:
          TERM: xterm
    steps:
      - checkout
      - run: pwd
      - run: ls
      - restore_cache:
          keys:
            - 'v2-deps-{{ .Branch }}-{{ checksum "package-lock.json" }}'
            - 'v2-deps-{{ .Branch }}-'
            - v2-deps-
      - run: npm ci
      - save_cache:
          key: 'v2-deps-{{ .Branch }}-{{ checksum "package-lock.json" }}'
          paths:
            - ~/.npm
            - ~/.cache
      - persist_to_workspace:
          root: ~/
          paths:
            - .cache
            - tmp
  test1:
    working_directory: ~/tmp
    docker:
      - image: 'cypress/base:10'
        environment:
          TERM: xterm
    steps:
      - attach_workspace:
          at: ~/
      - run: ls -la cypress
      - run: ls -la cypress/integration
      - run:
          name: Running cypress tests 1
          command: $(npm bin)/cypress run --spec cypress/integration/sample1.spec.js
      - store_artifacts:
          path: cypress/videos
      - store_artifacts:
          path: cypress/screenshots
  test2:
    working_directory: ~/tmp
    docker:
      - image: 'cypress/base:10'
        environment:
          TERM: xterm
    steps:
      - attach_workspace:
          at: ~/
      - run: ls -la cypress
      - run: ls -la cypress/integration
      - run:
          name: Running cypress tests 2
          command: $(npm bin)/cypress run --spec cypress/integration/sample2.spec.js
      - store_artifacts:
          path: cypress/videos
      - store_artifacts:
          path: cypress/screenshots
  test3:
    working_directory: ~/tmp
    docker:
      - image: 'cypress/base:10'
        environment:
          TERM: xterm
    steps:
      - attach_workspace:
          at: ~/
      - run: ls -la cypress
      - run: ls -la cypress/integration
      - run:
          name: Running cypress tests 3
          command: $(npm bin)/cypress run --spec cypress/integration/sample3.spec.js
      - store_artifacts:
          path: cypress/videos
      - store_artifacts:
          path: cypress/screenshots
  test4:
    working_directory: ~/tmp
    docker:
      - image: 'cypress/base:10'
        environment:
          TERM: xterm
    steps:
      - attach_workspace:
          at: ~/
      - run: ls -la cypress
      - run: ls -la cypress/integration
      - run:
          name: Running cypress tests 4
          command: $(npm bin)/cypress run --spec cypress/integration/sample4.spec.js
      - store_artifacts:
          path: cypress/videos
      - store_artifacts:
          path: cypress/screenshots
workflows:
  version: 2
  build_and_test:
    jobs:
      - build
      - test1:
          requires:
            - build
      - test2:
          requires:
            - build
      - test3:
          requires:
            - build
      - test4:
          requires:
            - build

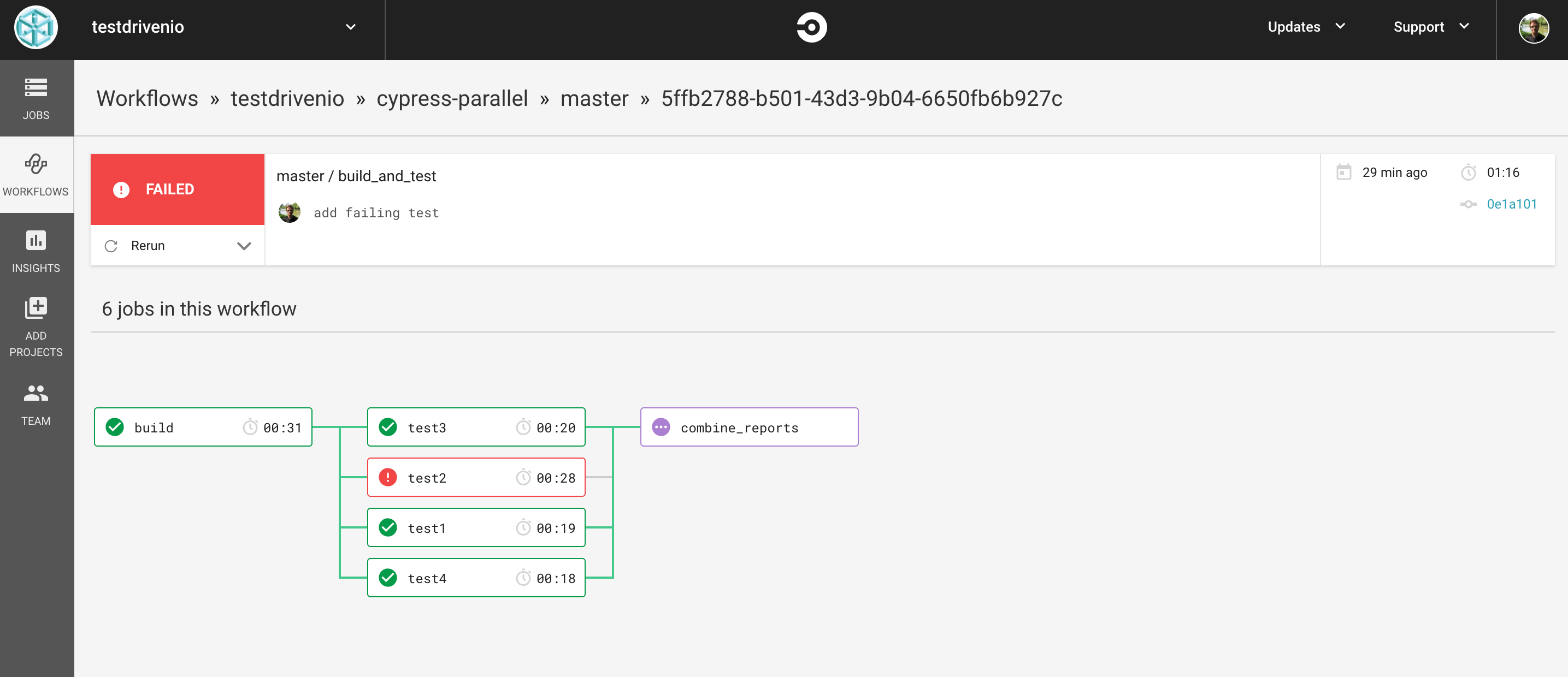

So, we created four test jobs, each will run a single spec file on a different machine on CircleCI. Commit your code and push it up to GitHub. This time, once the build job finishes, you should see each of the test jobs running at the same time:

Next, let's look at how to generate the config file dynamically.

Generate CircleCI Config

Create a "lib" folder in the project root, and then add the following files to that folder:

- circle.json

- generate-circle-config.js

Add the config for the build job to circle.json:

{

"version": 2,

"jobs": {

"build": {

"working_directory": "~/tmp",

"docker": [

{

"image": "cypress/base:10",

"environment": {

"TERM": "xterm"

}

}

],

"steps": [

"checkout",

{

"run": "pwd"

},

{

"run": "ls"

},

{

"restore_cache": {

"keys": [

"v2-deps-{{ .Branch }}-{{ checksum \"package-lock.json\" }}",

"v2-deps-{{ .Branch }}-",

"v2-deps-"

]

}

},

{

"run": "npm ci"

},

{

"save_cache": {

"key": "v2-deps-{{ .Branch }}-{{ checksum \"package-lock.json\" }}",

"paths": [

"~/.npm",

"~/.cache"

]

}

},

{

"persist_to_workspace": {

"root": "~/",

"paths": [

".cache",

"tmp"

]

}

}

]

}

},

"workflows": {

"version": 2,

"build_and_test": {

"jobs": [

"build"

]

}

}

}

Essentially, we'll use this config as the base, add the test jobs to it dynamically, and then save the final config file in YAML.

Add the code to generate-circle-config.js that:

- Gets the names of the spec files from the "cypress/integration" directory

- Reads the circle.json file as an object

- Adds the test jobs to the object

- Converts the object to YAML and writes it to disk as .circleci/config.yml

Code:

const path = require('path');

const fs = require('fs');

const yaml = require('write-yaml');

/*

helpers

*/

function createJSON(fileArray, data) {

for (const [index, value] of fileArray.entries()) {

data.jobs[`test${index + 1}`] = {

working_directory: '~/tmp',

docker: [

{

image: 'cypress/base:10',

environment: {

TERM: 'xterm',

},

},

],

steps: [

{

attach_workspace: {

at: '~/',

},

},

{

run: 'ls -la cypress',

},

{

run: 'ls -la cypress/integration',

},

{

run: {

name: `Running cypress tests ${index + 1}`,

command: `$(npm bin)/cypress run --spec cypress/integration/${value}`,

},

},

{

store_artifacts: {

path: 'cypress/videos',

},

},

{

store_artifacts: {

path: 'cypress/screenshots',

},

},

],

};

data.workflows.build_and_test.jobs.push({

[`test${index + 1}`]: {

requires: [

'build',

],

},

});

}

return data;

}

function writeFile(data) {

yaml(path.join(__dirname, '..', '.circleci', 'config.yml'), data, (err) => {

if (err) {

console.log(err);

} else {

console.log('Success!');

}

});

}

/*

main

*/

// get spec files as an array

const files = fs.readdirSync(path.join(__dirname, '..', 'cypress', 'integration')).filter(fn => fn.endsWith('.spec.js'));

// read circle.json

const circleConfigJSON = require(path.join(__dirname, 'circle.json'));

// add cypress specs to object as test jobs

const data = createJSON(files, circleConfigJSON);

// write file to disk

writeFile(data);

Review (and refactor) this on your own.
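One possible direction for that refactor, offered as a sketch rather than the tutorial's own code: move the job definition into a pure function so the loop only assigns jobs and workflow entries. The names buildTestJob and addTestJobs are hypothetical, and the small exampleBase object stands in for lib/circle.json; the job shape mirrors the one in createJSON above.

```javascript
// Hypothetical refactor of createJSON; buildTestJob and addTestJobs are
// illustrative names, not part of the original script.
function buildTestJob(jobNumber, specFile) {
  return {
    working_directory: '~/tmp',
    docker: [{ image: 'cypress/base:10', environment: { TERM: 'xterm' } }],
    steps: [
      { attach_workspace: { at: '~/' } },
      { run: 'ls -la cypress' },
      { run: 'ls -la cypress/integration' },
      {
        run: {
          name: `Running cypress tests ${jobNumber}`,
          command: `$(npm bin)/cypress run --spec cypress/integration/${specFile}`,
        },
      },
      { store_artifacts: { path: 'cypress/videos' } },
      { store_artifacts: { path: 'cypress/screenshots' } },
    ],
  };
}

function addTestJobs(specFiles, baseConfig) {
  // Deep-copy so the base config loaded from circle.json is left untouched.
  const config = JSON.parse(JSON.stringify(baseConfig));
  specFiles.forEach((specFile, index) => {
    const jobName = `test${index + 1}`;
    config.jobs[jobName] = buildTestJob(index + 1, specFile);
    config.workflows.build_and_test.jobs.push({ [jobName]: { requires: ['build'] } });
  });
  return config;
}

// Small in-memory stand-in for lib/circle.json (assumption for the demo).
const exampleBase = {
  version: 2,
  jobs: { build: {} },
  workflows: { version: 2, build_and_test: { jobs: ['build'] } },
};
const exampleConfig = addTestJobs(['sample1.spec.js', 'sample2.spec.js'], exampleBase);
console.log(Object.keys(exampleConfig.jobs)); // [ 'build', 'test1', 'test2' ]
```

Because addTestJobs works on a copy, running it twice in the same process does not pile duplicate jobs onto the base object.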

Install write-yaml and then generate the new config file:

$ npm install write-yaml --save-dev

$ node lib/generate-circle-config.js

Commit your code again and push it up to GitHub to trigger a new build. Again, four test jobs should run in parallel after the build job finishes.

Mochawesome

Moving along, let's add mochawesome as a Cypress custom reporter so we can generate a nice report after all test jobs finish running.

Install:

$ npm install mochawesome mocha --save-dev

Update the following run step in the createJSON function in generate-circle-config.js:

run: {

name: `Running cypress tests ${index + 1}`,

command: `$(npm bin)/cypress run --spec cypress/integration/${value} --reporter mochawesome --reporter-options "reportFilename=test${index + 1}"`,

},

Then, add a new step to createJSON that stores the generated report as an artifact:

{

store_artifacts: {

path: 'mochawesome-report',

},

},

createJSON should now look like:

function createJSON(fileArray, data) {

for (const [index, value] of fileArray.entries()) {

data.jobs[`test${index + 1}`] = {

working_directory: '~/tmp',

docker: [

{

image: 'cypress/base:10',

environment: {

TERM: 'xterm',

},

},

],

steps: [

{

attach_workspace: {

at: '~/',

},

},

{

run: 'ls -la cypress',

},

{

run: 'ls -la cypress/integration',

},

{

run: {

name: `Running cypress tests ${index + 1}`,

command: `$(npm bin)/cypress run --spec cypress/integration/${value} --reporter mochawesome --reporter-options "reportFilename=test${index + 1}"`,

},

},

{

store_artifacts: {

path: 'cypress/videos',

},

},

{

store_artifacts: {

path: 'cypress/screenshots',

},

},

{

store_artifacts: {

path: 'mochawesome-report',

},

},

],

};

data.workflows.build_and_test.jobs.push({

[`test${index + 1}`]: {

requires: [

'build',

],

},

});

}

return data;

}

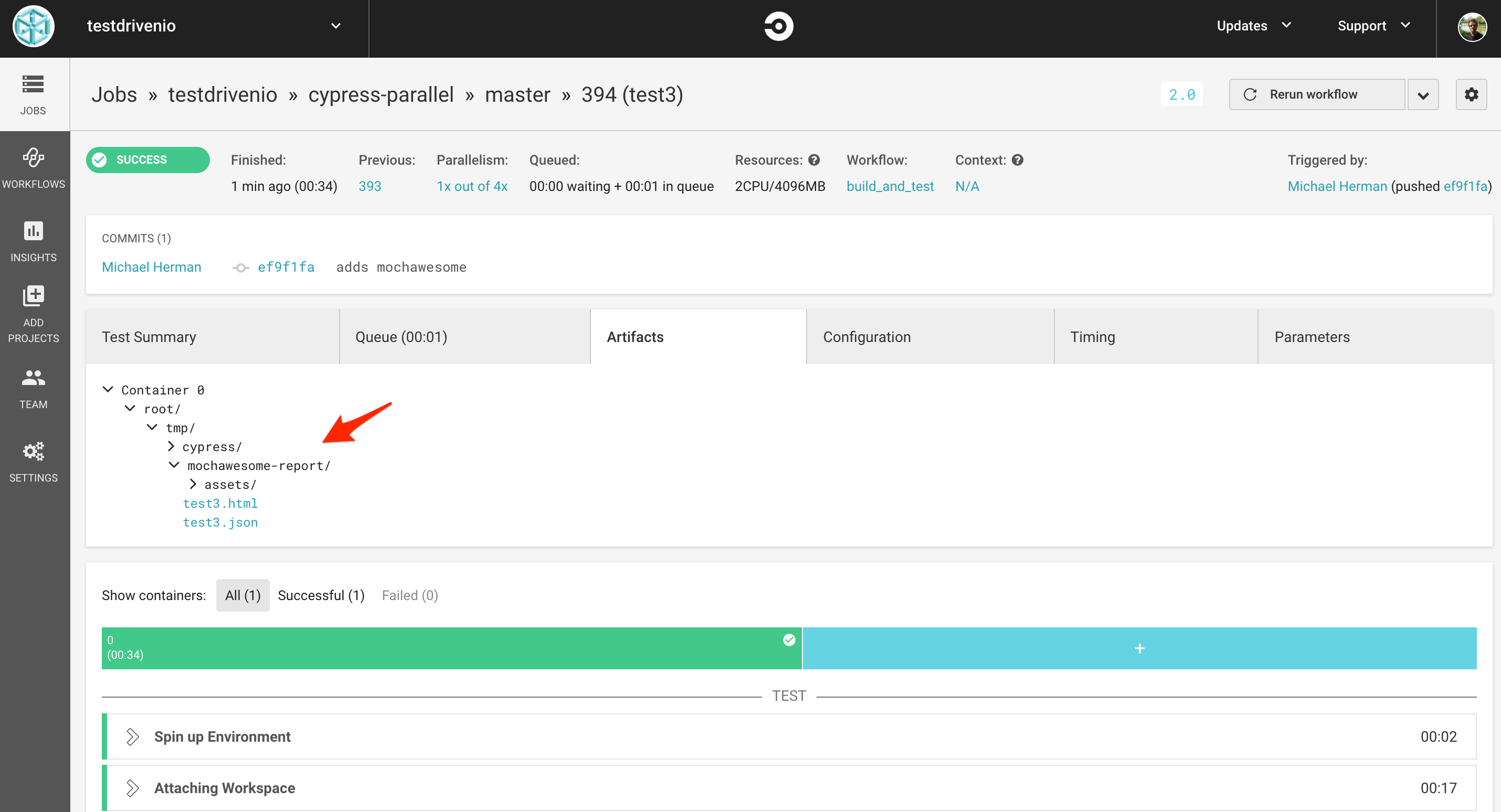

Now, each test run will generate a mochawesome report with a unique name. Try it out. Generate the new config. Commit and push your code. Each test job should store a copy of the generated mochawesome report in the "Artifacts" tab:

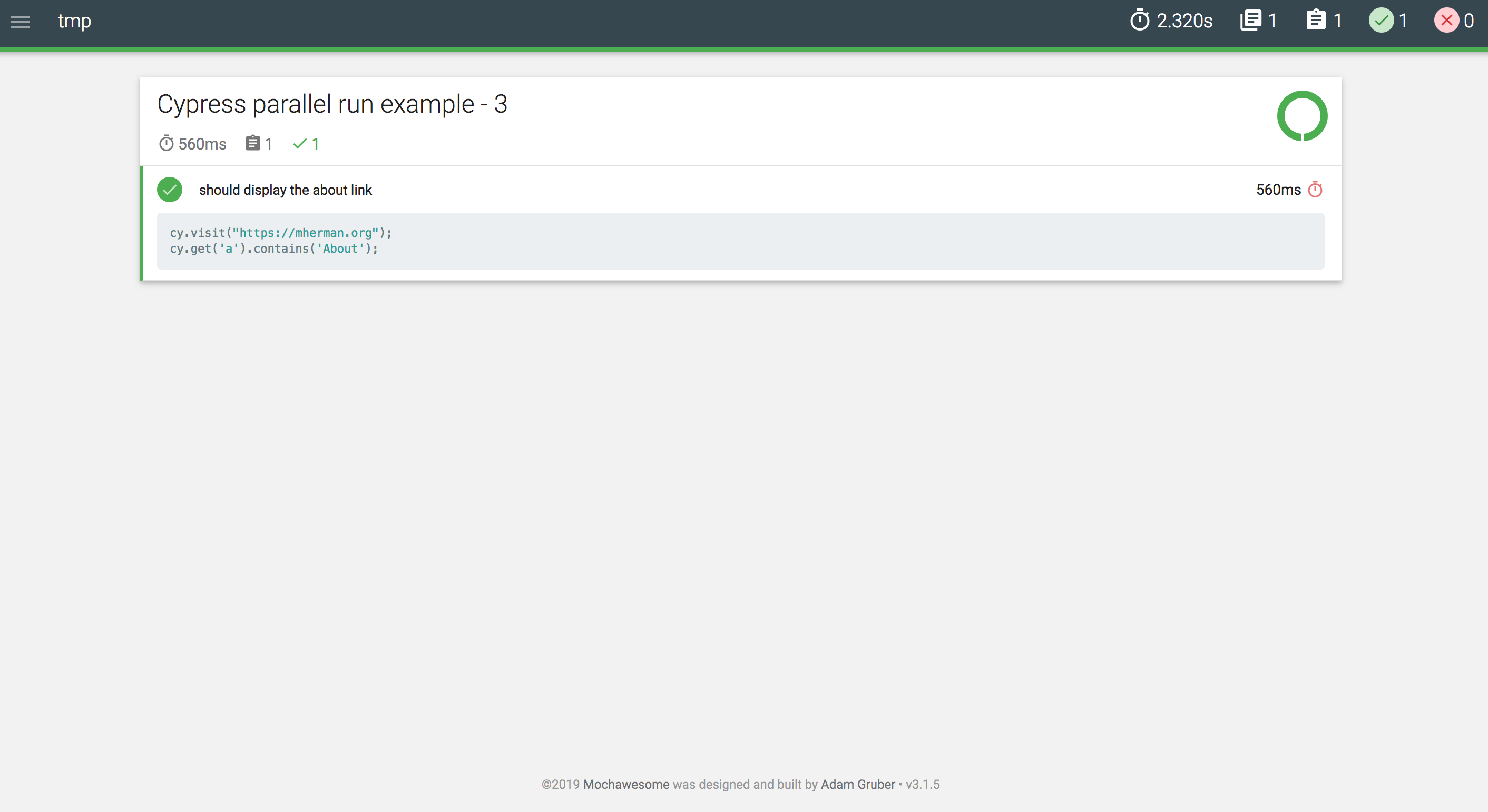

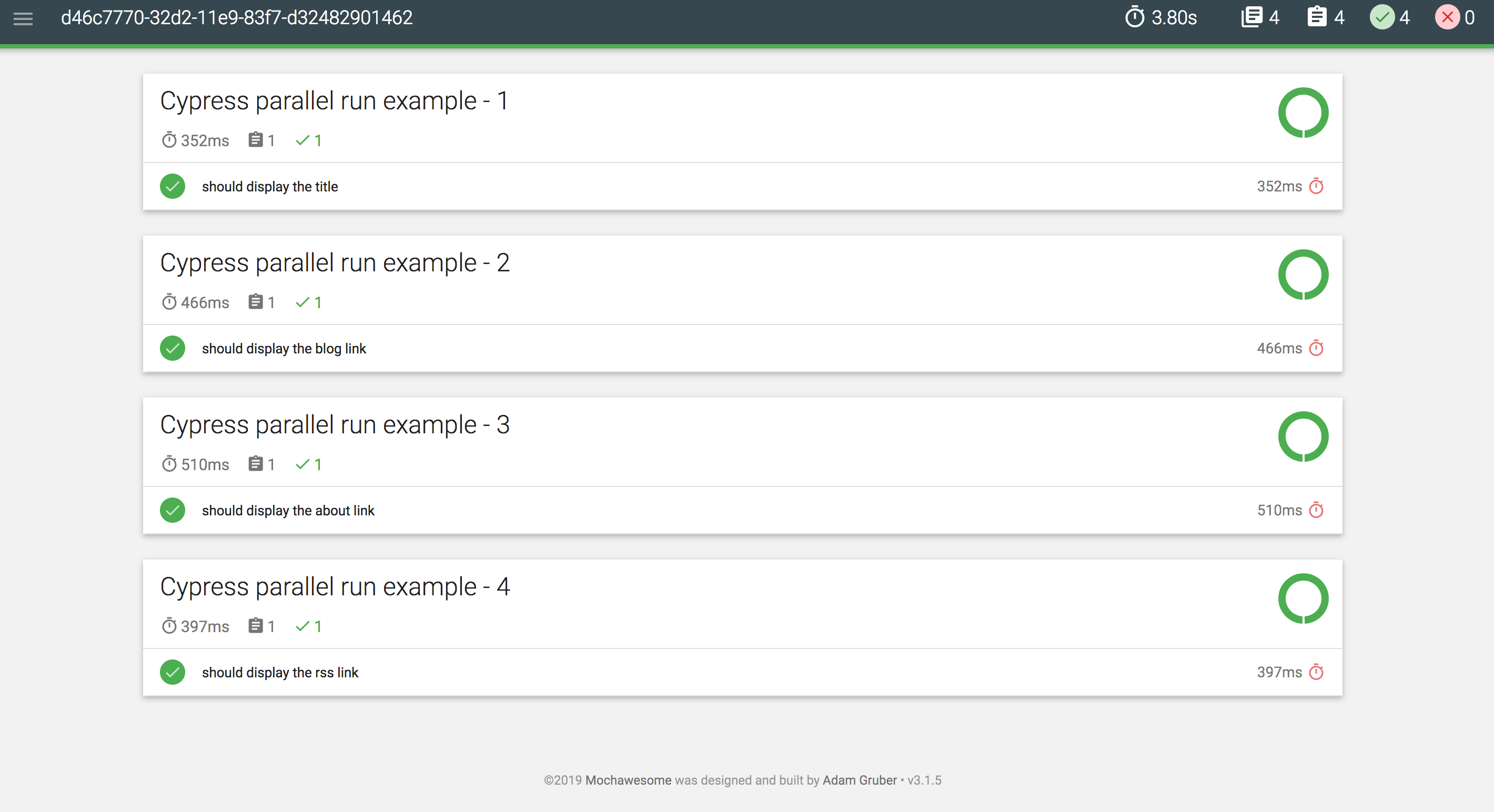

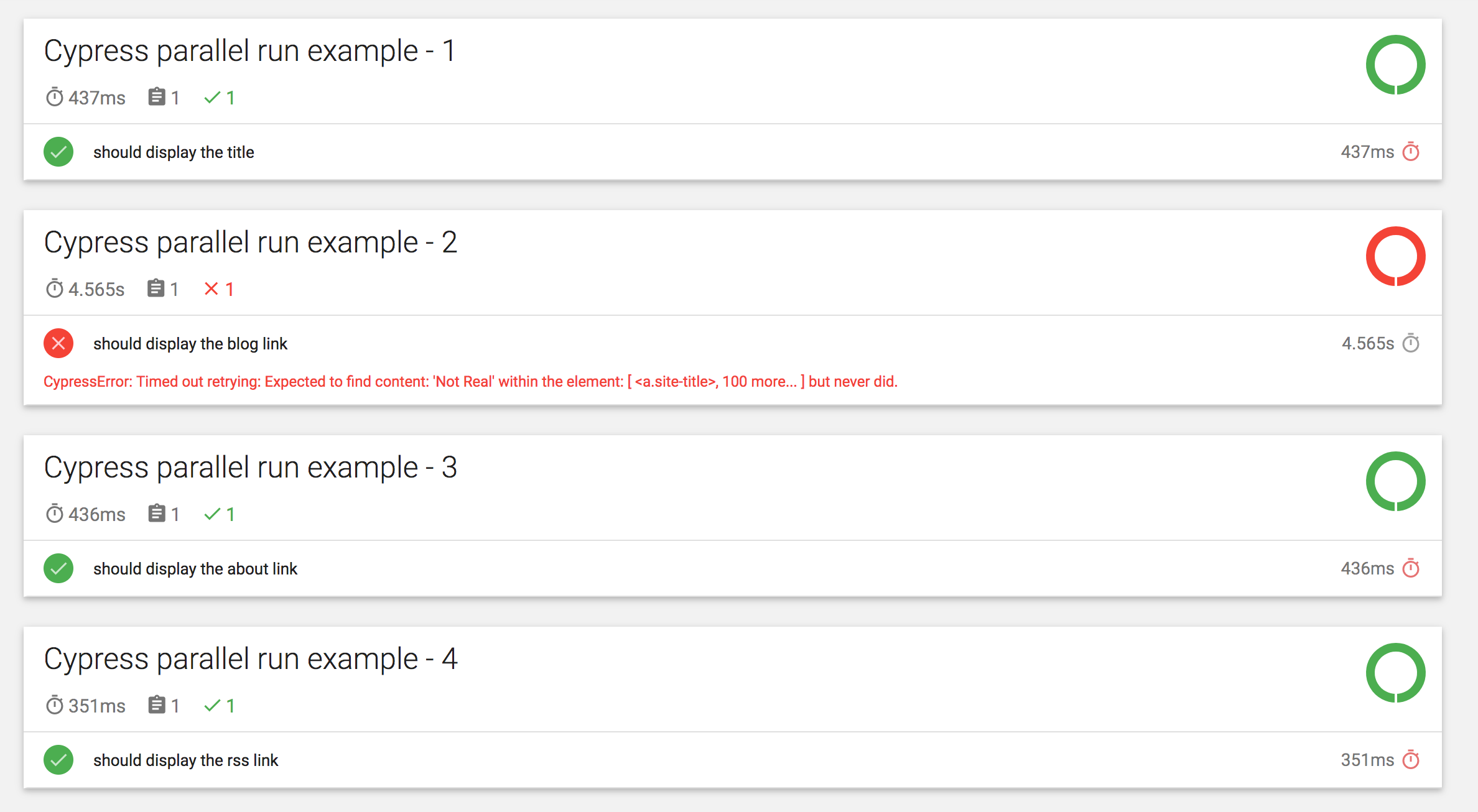

The actual report should look something like:

Combine Reports

The next step is to combine the separate reports into a single report. Start by adding a new step to the createJSON function that stores the generated report in a workspace:

{

persist_to_workspace: {

root: 'mochawesome-report',

paths: [

`test${index + 1}.json`,

`test${index + 1}.html`,

],

},

},

Also, add a new job to lib/circle.json called combine_reports, which attaches the workspace and then runs an ls command to display the contents of the directory:

"combine_reports": {

"working_directory": "~/tmp",

"docker": [

{

"image": "cypress/base:10",

"environment": {

"TERM": "xterm"

}

}

],

"steps": [

{

"attach_workspace": {

"at": "/tmp/mochawesome-report"

}

},

{

"run": "ls /tmp/mochawesome-report"

}

]

}

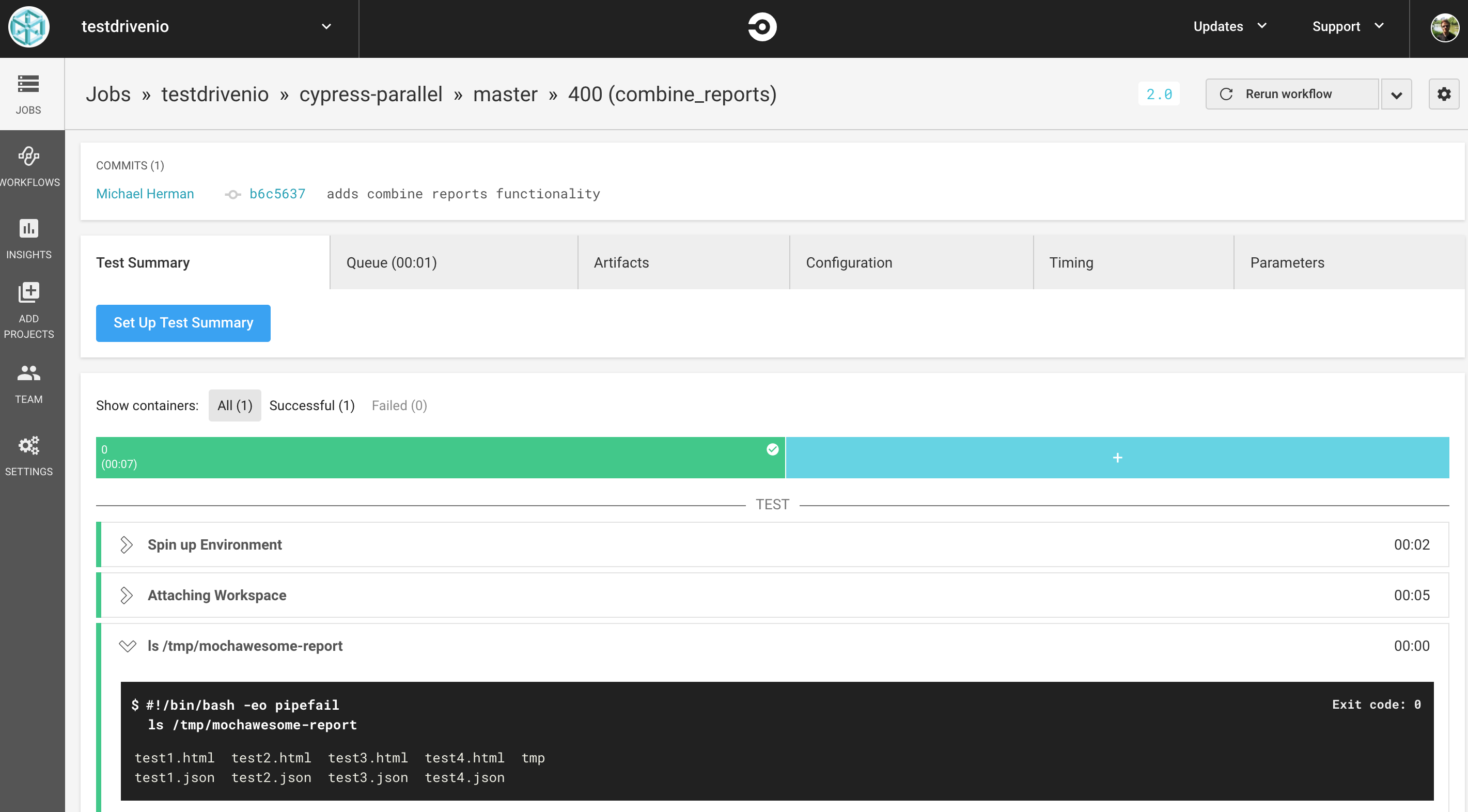

The purpose of the ls is just to make sure that we are persisting and attaching the workspace correctly. In other words, when run, you should see all the reports in the "/tmp/mochawesome-report" directory.

Since this job depends on the test jobs, update createJSON again, like so:

function createJSON(fileArray, data) {

const jobs = [];

for (const [index, value] of fileArray.entries()) {

jobs.push(`test${index + 1}`);

data.jobs[`test${index + 1}`] = {

working_directory: '~/tmp',

docker: [

{

image: 'cypress/base:10',

environment: {

TERM: 'xterm',

},

},

],

steps: [

{

attach_workspace: {

at: '~/',

},

},

{

run: 'ls -la cypress',

},

{

run: 'ls -la cypress/integration',

},

{

run: {

name: `Running cypress tests ${index + 1}`,

command: `$(npm bin)/cypress run --spec cypress/integration/${value} --reporter mochawesome --reporter-options "reportFilename=test${index + 1}"`,

},

},

{

store_artifacts: {

path: 'cypress/videos',

},

},

{

store_artifacts: {

path: 'cypress/screenshots',

},

},

{

store_artifacts: {

path: 'mochawesome-report',

},

},

{

persist_to_workspace: {

root: 'mochawesome-report',

paths: [

`test${index + 1}.json`,

`test${index + 1}.html`,

],

},

},

],

};

data.workflows.build_and_test.jobs.push({

[`test${index + 1}`]: {

requires: [

'build',

],

},

});

}

data.workflows.build_and_test.jobs.push({

combine_reports: {

'requires': jobs,

},

});

return data;

}
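To look at the dependency wiring in isolation, here is a minimal sketch that builds only the workflow job list for a given set of spec files. buildWorkflowJobs is a hypothetical helper, not part of the script above; it reproduces the same structure that createJSON pushes onto data.workflows.build_and_test.jobs.

```javascript
// Hypothetical helper: builds only the `jobs` list of the build_and_test workflow.
function buildWorkflowJobs(specFiles) {
  const testJobNames = specFiles.map((_, index) => `test${index + 1}`);
  return [
    'build',
    // Every test job waits for the build job.
    ...testJobNames.map(name => ({ [name]: { requires: ['build'] } })),
    // combine_reports waits for all of the test jobs.
    { combine_reports: { requires: testJobNames } },
  ];
}

const workflowJobs = buildWorkflowJobs(['sample1.spec.js', 'sample2.spec.js']);
console.log(JSON.stringify(workflowJobs, null, 2));
```

The last entry lists every test job under requires, which is why combine_reports only starts once all of them have finished.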

Generate the config:

$ node lib/generate-circle-config.js

The config file should now look like:

version: 2
jobs:
  build:
    working_directory: ~/tmp
    docker:
      - image: 'cypress/base:10'
        environment:
          TERM: xterm
    steps:
      - checkout
      - run: pwd
      - run: ls
      - restore_cache:
          keys:
            - 'v2-deps-{{ .Branch }}-{{ checksum "package-lock.json" }}'
            - 'v2-deps-{{ .Branch }}-'
            - v2-deps-
      - run: npm ci
      - save_cache:
          key: 'v2-deps-{{ .Branch }}-{{ checksum "package-lock.json" }}'
          paths:
            - ~/.npm
            - ~/.cache
      - persist_to_workspace:
          root: ~/
          paths:
            - .cache
            - tmp
  combine_reports:
    working_directory: ~/tmp
    docker:
      - image: 'cypress/base:10'
        environment:
          TERM: xterm
    steps:
      - attach_workspace:
          at: /tmp/mochawesome-report
      - run: ls /tmp/mochawesome-report
  test1:
    working_directory: ~/tmp
    docker:
      - image: 'cypress/base:10'
        environment:
          TERM: xterm
    steps:
      - attach_workspace:
          at: ~/
      - run: ls -la cypress
      - run: ls -la cypress/integration
      - run:
          name: Running cypress tests 1
          command: >-
            $(npm bin)/cypress run --spec cypress/integration/sample1.spec.js
            --reporter mochawesome --reporter-options "reportFilename=test1"
      - store_artifacts:
          path: cypress/videos
      - store_artifacts:
          path: cypress/screenshots
      - store_artifacts:
          path: mochawesome-report
      - persist_to_workspace:
          root: mochawesome-report
          paths:
            - test1.json
            - test1.html
  test2:
    working_directory: ~/tmp
    docker:
      - image: 'cypress/base:10'
        environment:
          TERM: xterm
    steps:
      - attach_workspace:
          at: ~/
      - run: ls -la cypress
      - run: ls -la cypress/integration
      - run:
          name: Running cypress tests 2
          command: >-
            $(npm bin)/cypress run --spec cypress/integration/sample2.spec.js
            --reporter mochawesome --reporter-options "reportFilename=test2"
      - store_artifacts:
          path: cypress/videos
      - store_artifacts:
          path: cypress/screenshots
      - store_artifacts:
          path: mochawesome-report
      - persist_to_workspace:
          root: mochawesome-report
          paths:
            - test2.json
            - test2.html
  test3:
    working_directory: ~/tmp
    docker:
      - image: 'cypress/base:10'
        environment:
          TERM: xterm
    steps:
      - attach_workspace:
          at: ~/
      - run: ls -la cypress
      - run: ls -la cypress/integration
      - run:
          name: Running cypress tests 3
          command: >-
            $(npm bin)/cypress run --spec cypress/integration/sample3.spec.js
            --reporter mochawesome --reporter-options "reportFilename=test3"
      - store_artifacts:
          path: cypress/videos
      - store_artifacts:
          path: cypress/screenshots
      - store_artifacts:
          path: mochawesome-report
      - persist_to_workspace:
          root: mochawesome-report
          paths:
            - test3.json
            - test3.html
  test4:
    working_directory: ~/tmp
    docker:
      - image: 'cypress/base:10'
        environment:
          TERM: xterm
    steps:
      - attach_workspace:
          at: ~/
      - run: ls -la cypress
      - run: ls -la cypress/integration
      - run:
          name: Running cypress tests 4
          command: >-
            $(npm bin)/cypress run --spec cypress/integration/sample4.spec.js
            --reporter mochawesome --reporter-options "reportFilename=test4"
      - store_artifacts:
          path: cypress/videos
      - store_artifacts:
          path: cypress/screenshots
      - store_artifacts:
          path: mochawesome-report
      - persist_to_workspace:
          root: mochawesome-report
          paths:
            - test4.json
            - test4.html
workflows:
  version: 2
  build_and_test:
    jobs:
      - build
      - test1:
          requires:
            - build
      - test2:
          requires:
            - build
      - test3:
          requires:
            - build
      - test4:
          requires:
            - build
      - combine_reports:
          requires:
            - test1
            - test2
            - test3
            - test4

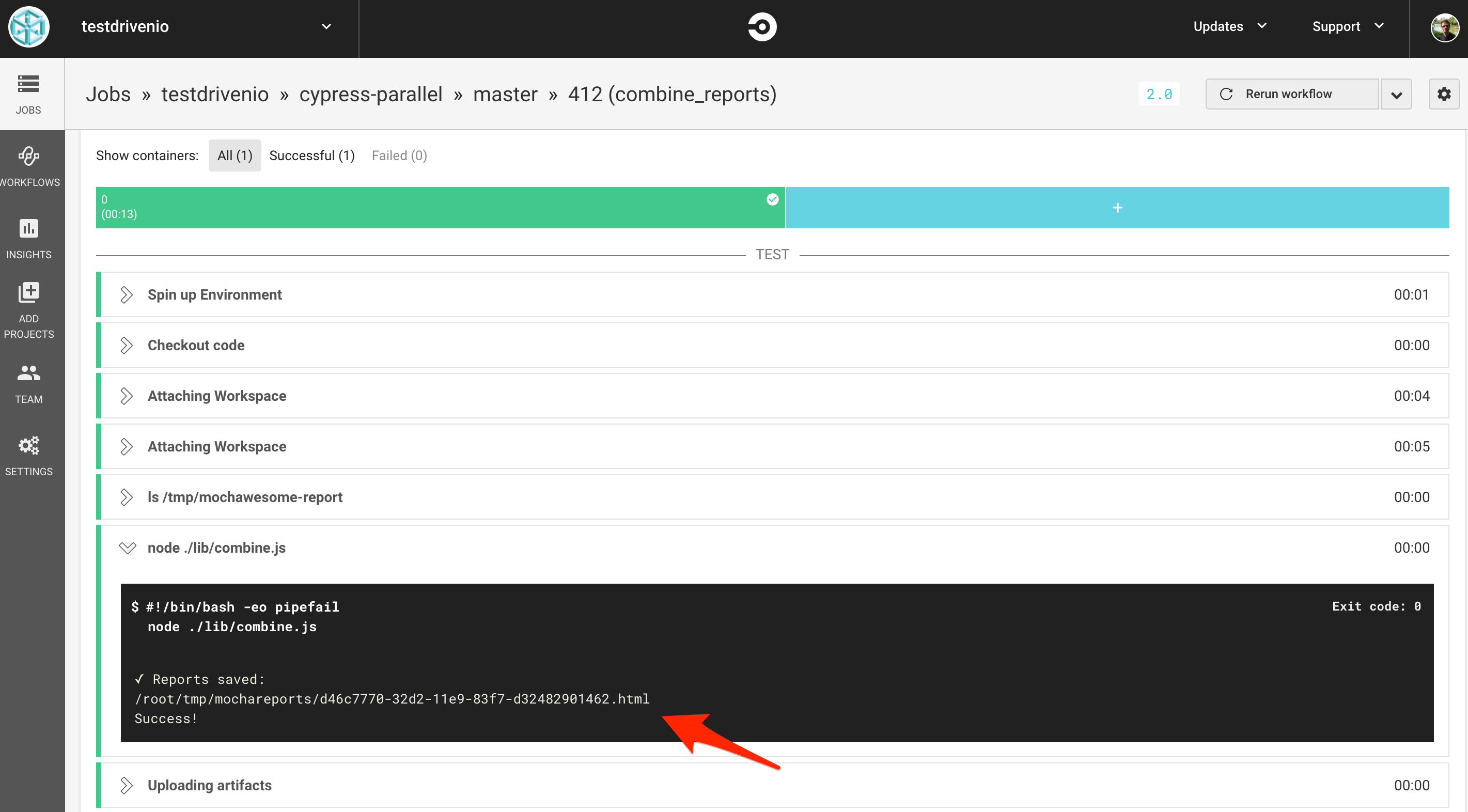

Commit and push to GitHub again. Make sure combine_reports runs at the end:

Next, add a script to combine the reports:

const fs = require('fs');

const path = require('path');

const shell = require('shelljs');

const { v1: uuidv1 } = require('uuid'); // named export; the deep 'uuid/v1' import was removed in uuid 8

function getFiles(dir, ext, fileList = []) {

const files = fs.readdirSync(dir);

files.forEach((file) => {

const filePath = `${dir}/${file}`;

if (fs.statSync(filePath).isDirectory()) {

getFiles(filePath, ext, fileList); // pass ext through so files in nested directories are filtered too

} else if (path.extname(file) === ext) {

fileList.push(filePath);

}

});

return fileList;

}

function traverseAndModifyTimedOut(target, deep) {

if (target['tests'] && target['tests'].length) {

target['tests'].forEach(test => {

test.timedOut = false;

});

}

if (target['suites']) {

target['suites'].forEach(suite => {

traverseAndModifyTimedOut(suite, deep + 1);

})

}

}

function combineMochaAwesomeReports() {

const reportDir = path.join('/', 'tmp', 'mochawesome-report');

const reports = getFiles(reportDir, '.json', []);

const suites = [];

let totalSuites = 0;

let totalTests = 0;

let totalPasses = 0;

let totalFailures = 0;

let totalPending = 0;

let startTime;

let endTime;

let totalskipped = 0;

reports.forEach((report, idx) => {

const rawdata = fs.readFileSync(report);

const parsedData = JSON.parse(rawdata);

if (idx === 0) { startTime = parsedData.stats.start; }

if (idx === (reports.length - 1)) { endTime = parsedData.stats.end; }

totalSuites += parseInt(parsedData.stats.suites, 10);

totalskipped += parseInt(parsedData.stats.skipped, 10);

totalPasses += parseInt(parsedData.stats.passes, 10);

totalFailures += parseInt(parsedData.stats.failures, 10);

totalPending += parseInt(parsedData.stats.pending, 10);

totalTests += parseInt(parsedData.stats.tests, 10);

if (parsedData && parsedData.suites && parsedData.suites.suites) {

parsedData.suites.suites.forEach(suite => {

suites.push(suite)

})

}

});

return {

totalSuites,

totalTests,

totalPasses,

totalFailures,

totalPending,

startTime,

endTime,

totalskipped,

suites,

};

}

function getPercentClass(pct) {

if (pct <= 50) {

return 'danger';

} else if (pct > 50 && pct < 80) {

return 'warning';

}

return 'success';

}

function writeReport(obj, uuid) {

const sampleFile = path.join(__dirname, 'sample.json');

const outFile = path.join(__dirname, '..', `${uuid}.json`);

fs.readFile(sampleFile, 'utf8', (err, data) => {

if (err) throw err;

const parsedSampleFile = JSON.parse(data);

const stats = parsedSampleFile.stats;

stats.suites = obj.totalSuites;

stats.tests = obj.totalTests;

stats.passes = obj.totalPasses;

stats.failures = obj.totalFailures;

stats.pending = obj.totalPending;

stats.start = obj.startTime;

stats.end = obj.endTime;

stats.duration = new Date(obj.endTime) - new Date(obj.startTime);

stats.testsRegistered = obj.totalTests - obj.totalPending;

stats.passPercent = Math.round((stats.passes / (stats.tests - stats.pending)) * 1000) / 10;

stats.pendingPercent = Math.round((stats.pending / stats.testsRegistered) * 1000) /10;

stats.skipped = obj.totalskipped;

stats.hasSkipped = obj.totalskipped > 0;

stats.passPercentClass = getPercentClass(stats.passPercent);

stats.pendingPercentClass = getPercentClass(stats.pendingPercent);

obj.suites.forEach(suit => {

traverseAndModifyTimedOut(suit, 0);

});

parsedSampleFile.suites.suites = obj.suites;

parsedSampleFile.suites.uuid = uuid;

fs.writeFile(outFile, JSON.stringify(parsedSampleFile), { flag: 'wx' }, (error) => {

if (error) throw error;

});

});

}

const data = combineMochaAwesomeReports();

const uuid = uuidv1();

writeReport(data, uuid);

shell.exec(`./node_modules/.bin/marge ${uuid}.json --reportDir mochareports --reportTitle ${uuid}`, (code, stdout, stderr) => {

if (stderr) {

console.log(stderr);

} else {

console.log('Success!');

}

});

Save this as combine.js in "lib".

This script will gather up all the mochawesome JSON files (which contain the raw JSON output for each mochawesome report), combine them, and generate a new mochawesome report.

If interested, hop back to CircleCI to view one of the generated mochawesome JSON files in the "Artifacts" tab from one of the test jobs.
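As a rough illustration of the combining step, the sketch below sums the stats of two in-memory objects shaped like the stats block that combine.js reads. The sample values are made up, and mergeStats is a hypothetical name. Unlike combine.js, which takes the start time from the first file and the end time from the last, this variant uses the earliest start and the latest end, so the result does not depend on the order in which the files are read.

```javascript
// Hypothetical helper; field names follow the `stats` block read by combine.js.
function mergeStats(statsList) {
  if (statsList.length === 0) {
    throw new Error('no reports to merge');
  }
  const counters = ['suites', 'tests', 'passes', 'failures', 'pending', 'skipped'];
  const merged = {};
  counters.forEach((key) => {
    merged[key] = statsList.reduce((sum, stats) => sum + Number(stats[key] || 0), 0);
  });
  // Earliest start and latest end, independent of file order.
  const starts = statsList.map(stats => new Date(stats.start).getTime());
  const ends = statsList.map(stats => new Date(stats.end).getTime());
  merged.start = new Date(Math.min(...starts)).toISOString();
  merged.end = new Date(Math.max(...ends)).toISOString();
  merged.duration = Math.max(...ends) - Math.min(...starts);
  return merged;
}

// Made-up sample data for two parallel test jobs.
const sampleStats = [
  { suites: 1, tests: 1, passes: 1, failures: 0, pending: 0, skipped: 0,
    start: '2019-01-01T10:00:05.000Z', end: '2019-01-01T10:00:09.000Z' },
  { suites: 1, tests: 1, passes: 0, failures: 1, pending: 0, skipped: 0,
    start: '2019-01-01T10:00:03.000Z', end: '2019-01-01T10:00:08.000Z' },
];
const mergedStats = mergeStats(sampleStats);
console.log(mergedStats.tests, mergedStats.failures, mergedStats.duration); // 2 1 6000
```

With parallel jobs the reports overlap in time, so the duration here is wall-clock time from the first start to the last end rather than the sum of the individual durations.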

Install the dependencies:

$ npm install shelljs uuid --save-dev

Add sample.json to the "lib" directory:

{

"stats": {

"suites": 0,

"tests": 0,

"passes": 0,

"pending": 0,

"failures": 0,

"start": "",

"end": "",

"duration": 0,

"testsRegistered": 0,

"passPercent": 0,

"pendingPercent": 0,

"other": 0,

"hasOther": false,

"skipped": 0,

"hasSkipped": false,

"passPercentClass": "success",

"pendingPercentClass": "success"

},

"suites": {

"uuid": "",

"title": "",

"fullFile": "",

"file": "",

"beforeHooks": [],

"afterHooks": [],

"tests": [],

"suites": [],

"passes": [],

"failures": [],

"pending": [],

"skipped": [],

"duration": 0,

"root": true,

"rootEmpty": true,

"_timeout": 2000

},

"copyrightYear": 2019

}

Update combine_reports in circle.json to run the combine.js script and then save the new reports as an artifact:

"combine_reports": {

"working_directory": "~/tmp",

"docker": [

{

"image": "cypress/base:10",

"environment": {

"TERM": "xterm"

}

}

],

"steps": [

"checkout",

{

"attach_workspace": {

"at": "~/"

}

},

{

"attach_workspace": {

"at": "/tmp/mochawesome-report"

}

},

{

"run": "ls /tmp/mochawesome-report"

},

{

"run": "node ./lib/combine.js"

},

{

"store_artifacts": {

"path": "mochareports"

}

}

]

}

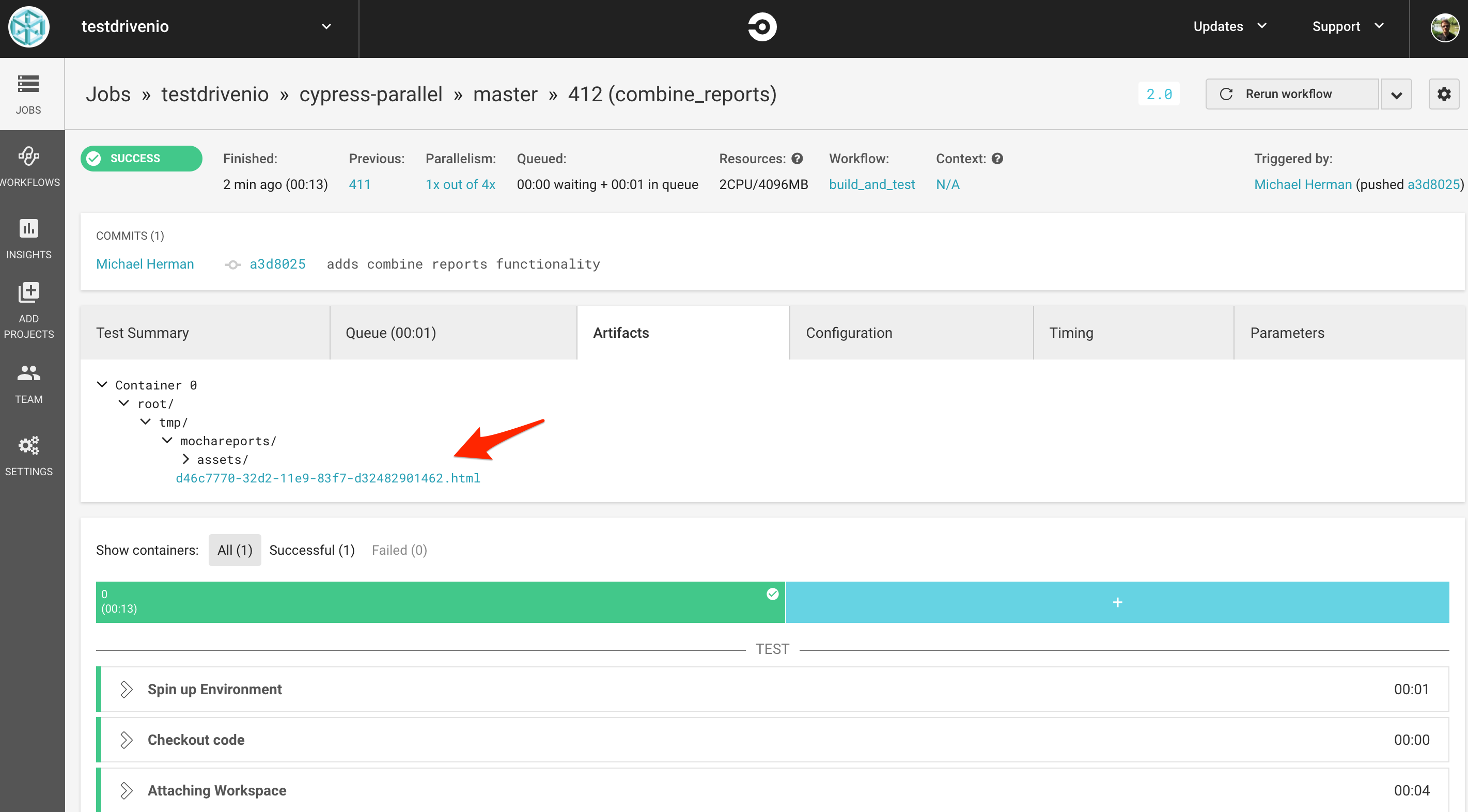

To test, generate the new config, commit, and push your code. All jobs should pass and you should see the combined final report.

Handle Test Failures

What happens if a test fails?

Change cy.get('a').contains('Blog'); to cy.get('a').contains('Not Real'); in sample2.spec.js:

describe('Cypress parallel run example - 2', () => {

it('should display the blog link', () => {

cy.visit(`https://mherman.org`);

cy.get('a').contains('Not Real');

});

});

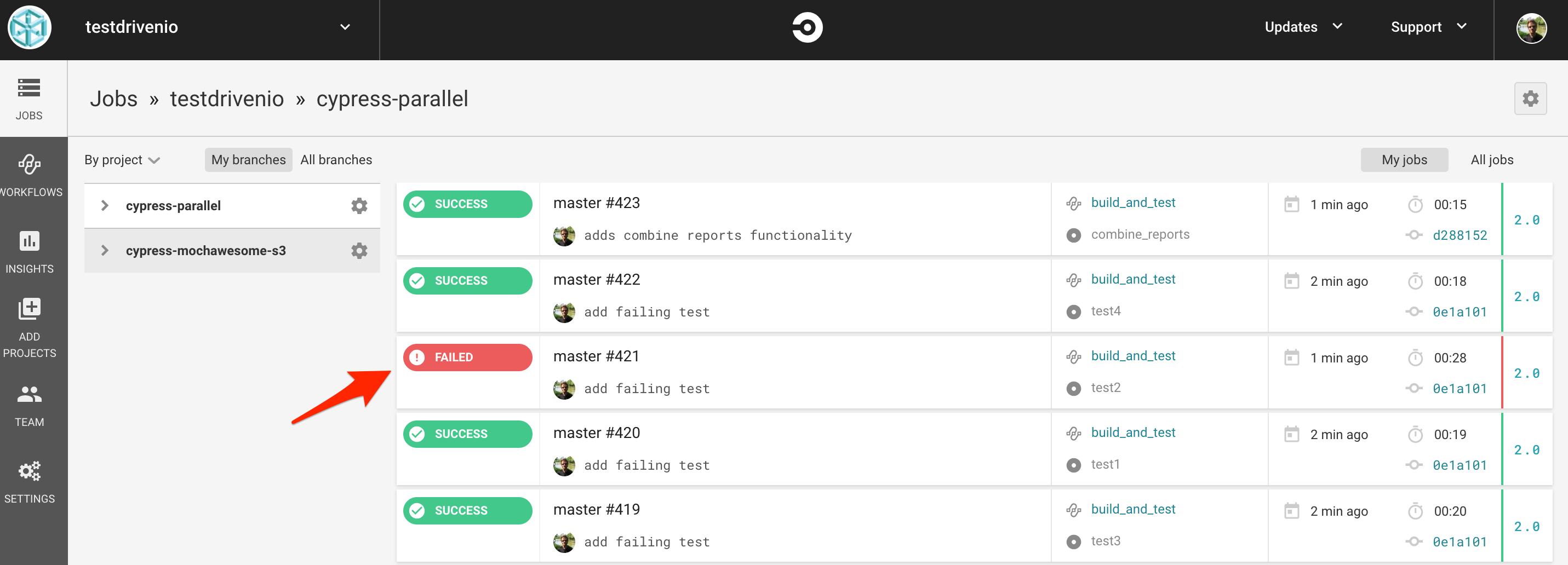

Commit and push your code. Since the combine_reports job is dependent on the test jobs, it won't run if any one of those test jobs fails.

So, how do you get the combine_reports job to run even if a previous job in the workflow fails?

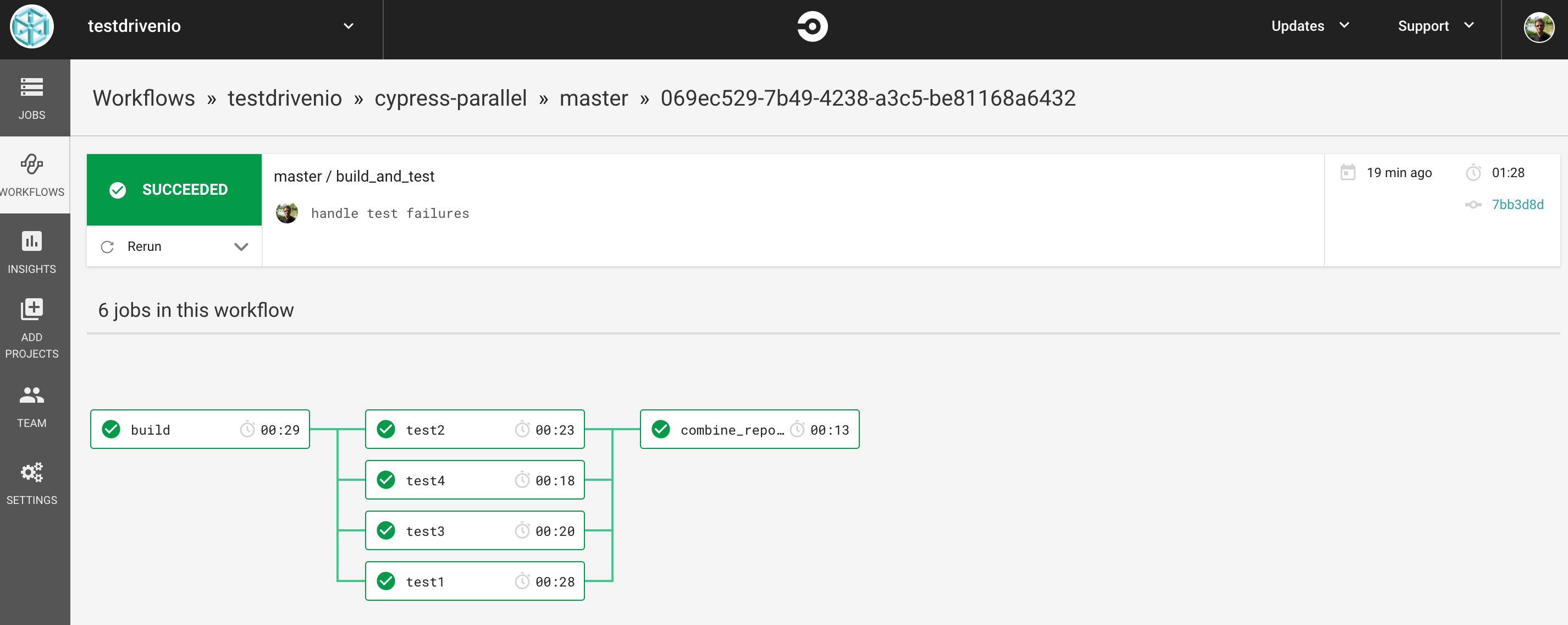

Unfortunately, this functionality is not currently supported by CircleCI. See this discussion for more info. Because we really only care about the mochawesome JSON report, you can get around this issue by suppressing the exit code for the test jobs. The test jobs will still run and generate the mochawesome report--they will just always pass regardless of whether the underlying tests pass or fail.

Update the following run again in createJSON:

run: {

name: `Running cypress tests ${index + 1}`,

command: `if $(npm bin)/cypress run --spec cypress/integration/${value} --reporter mochawesome --reporter-options "reportFilename=test${index + 1}"; then echo 'pass'; else echo 'fail'; fi`,

},

The single-line bash if/else is a bit hard to read. Refactor this on your own.
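One way to approach that refactor, as a sketch: assemble the command from an array of lines so the shell logic reads top to bottom. buildTestCommand is a hypothetical helper; the shell logic is the same if/else as in the run step, and it assumes the CI shell accepts a multi-line script as the command value.

```javascript
// Hypothetical helper that returns the same if/else as a multi-line shell script.
function buildTestCommand(specFile, jobNumber) {
  const cypressRun = [
    '$(npm bin)/cypress run',
    `--spec cypress/integration/${specFile}`,
    '--reporter mochawesome',
    `--reporter-options "reportFilename=test${jobNumber}"`,
  ].join(' ');
  return [
    `if ${cypressRun}; then`,
    "  echo 'pass'",
    'else',
    "  echo 'fail'",
    'fi',
  ].join('\n');
}

console.log(buildTestCommand('sample2.spec.js', 2));
```

Inside createJSON, the run step's command could then be set to buildTestCommand(value, index + 1).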

Does it work? Generate the new config file, commit, and push your code. All test jobs should pass and the final mochawesome report should show the failing spec.

One last thing: We should probably still fail the entire build if any test fails. The quickest way to implement this is within the shell.exec callback in combine.js:

shell.exec(`./node_modules/.bin/marge ${uuid}.json --reportDir mochareports --reportTitle ${uuid}`, (code, stdout, stderr) => {

if (stderr) {

console.log(stderr);

} else {

console.log('Success!');

if (data.totalFailures > 0) {

process.exit(1);

} else {

process.exit(0);

}

}

});
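The exit-code decision can also be written as a small pure function, which makes it easy to check without running marge. This is only a sketch: exitCodeFor is a hypothetical name, and treating a non-zero marge exit code as a build failure is an assumption that goes beyond the callback above.

```javascript
// Hypothetical helper: decides the process exit code for combine.js.
function exitCodeFor(margeExitCode, totalFailures) {
  if (margeExitCode !== 0) {
    return 1; // assumption: a failed report generation should fail the build
  }
  return totalFailures > 0 ? 1 : 0; // fail the build when any test failed
}

console.log(exitCodeFor(0, 0)); // 0
console.log(exitCodeFor(0, 2)); // 1
console.log(exitCodeFor(1, 0)); // 1
```

In the callback, this would be called as process.exit(exitCodeFor(code, data.totalFailures)).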

Test this out. Then, try testing a few other scenarios, like skipping a test or adding more than four spec files.

Conclusion

This tutorial looked at how to run Cypress tests in parallel, without using the Cypress record feature, on CircleCI. It's worth noting that you can implement the exact same workflow with any of the CI services that offer parallelism--like GitLab CI, Travis, and Semaphore, to name a few--as well as your own custom CI platform with Jenkins or Concourse. If your CI service does not offer parallelism, then you can use Docker to run jobs in parallel. Contact us for more details on this.

Looking for some challenges?

- Create a Slack bot that notifies a channel when the tests are done running and adds a link to the mochawesome report as well as any screenshots or videos of failed test specs

- Upload the final report to an S3 bucket (see cypress-mochawesome-s3)

- Track the number of failed tests over time by storing the test results in a database

- Run the entire test suite multiple times as a nightly job and then only indicate whether or not a test has failed if it fails X number of times--this will help surface flaky tests and eliminate unnecessary developer intervention
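For the last challenge, the core check might look something like the sketch below. The data shape (an array of runs, each mapping a test title to 'passed' or 'failed') and the name testsFailingAtLeast are assumptions made for illustration only; real results would have to be extracted from the mochawesome JSON first.

```javascript
// Hypothetical helper: returns the titles of tests that failed in at least
// `threshold` of the given runs.
function testsFailingAtLeast(runs, threshold) {
  const failureCounts = {};
  runs.forEach((run) => {
    Object.keys(run).forEach((title) => {
      if (run[title] === 'failed') {
        failureCounts[title] = (failureCounts[title] || 0) + 1;
      }
    });
  });
  return Object.keys(failureCounts).filter(title => failureCounts[title] >= threshold);
}

// Made-up results from three nightly runs.
const nightlyRuns = [
  { 'should display the title': 'passed', 'should display the blog link': 'failed' },
  { 'should display the title': 'failed', 'should display the blog link': 'failed' },
  { 'should display the title': 'passed', 'should display the blog link': 'failed' },
];
console.log(testsFailingAtLeast(nightlyRuns, 2)); // [ 'should display the blog link' ]
```

A test that fails only once across the runs stays below the threshold and is not reported, which is the point of the exercise.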

Grab the final code from the cypress-parallel repo. Cheers!

Michael Herman
