In this tutorial, we'll detail how to deploy self-hosted GitLab CI/CD runners to DigitalOcean with Docker.

GitLab CI/CD

GitLab CI/CD is a continuous integration and delivery (CI/CD) solution, fully integrated with GitLab. Jobs from a GitLab CI/CD pipeline are run on processes called runners. You can either use GitLab-hosted shared runners or run your own self-hosted runners on your own infrastructure.

Scope

Runners can be made available to all projects and groups in a GitLab instance, to specific groups, or to specific projects (repositories). We'll use the second approach, a group runner registered with the group's token, so that we can process jobs from multiple repositories in the group with the same runner.

You can also use tags to control which jobs a runner can run.
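
As a rough illustration of tags, the sketch below writes a sample pipeline file whose job asks for a tagged runner. The tag name `docker-socket` and the scratch directory are hypothetical; a runner would need the same tag (for example, via the `--tag-list` option of `gitlab-runner register`) before it picks up the job.

```bash
# Write a sample pipeline file into a scratch directory (demo_dir is hypothetical).
demo_dir="$(mktemp -d)"

cat > "$demo_dir/.gitlab-ci.yml" <<'EOF'
build:
  image: docker:stable
  tags:
    - docker-socket   # hypothetical tag; only runners carrying it pick up this job
  script:
    - docker info
EOF

# Show the generated file.
cat "$demo_dir/.gitlab-ci.yml"
```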

For more on a runner's scope, review The scope of runners from the official docs.

Docker

Since you'll probably want to run docker commands in your jobs -- to build and test applications running inside Docker containers -- you need to pick one of the following three methods:

- The shell executor
- Docker-in-Docker (dind)
- Docker socket binding

We'll use the socket binding approach (sometimes called Docker-out-of-Docker): the host's Docker socket is bind-mounted into the container as a volume. The container running the GitLab runner can then communicate with the host's Docker daemon and thus spawn sibling containers.
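
Since everything hinges on the socket being present, here's a minimal sketch of a check you could run on the Docker host or inside a container that mounts it. The helper name `socket_available` is hypothetical, and the path assumes Docker's usual default socket location.

```bash
# Succeeds only if the given path exists and is a Unix socket.
socket_available() {
  [ -S "$1" ]
}

if socket_available /var/run/docker.sock; then
  echo "Docker socket found"
else
  echo "Docker socket missing"
fi
```

If the socket is there, a container started with `-v /var/run/docker.sock:/var/run/docker.sock` and running `docker ps` should list the host's containers, which is what "sibling containers" means in practice.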

While I don't have any strong opinions about any of these approaches, I do recommend reading Using Docker-in-Docker for your CI or testing environment? Think twice. by Jérôme Petazzoni, the creator of Docker-in-Docker.

If you're curious about the Docker-in-Docker method, check out Custom Gitlab CI/CD Runner, Cached for Speed with Docker-in-Docker.

DigitalOcean Setup

First, sign up for a DigitalOcean account if you don't already have one, and then generate an access token so you can access the DigitalOcean API.

Add the token to your environment:

$ export DIGITAL_OCEAN_ACCESS_TOKEN=[your_digital_ocean_token]
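
If you script the next steps, it may help to fail early when the token is missing. This is only a sketch: `require_env` is a hypothetical helper, not something Docker Machine provides.

```bash
# require_env NAME
# Fails with a message on stderr if the named environment variable is unset or empty.
require_env() {
  var_name="$1"
  eval "var_value=\${$var_name:-}"
  if [ -z "$var_value" ]; then
    echo "error: $var_name is not set" >&2
    return 1
  fi
}
```

You could then guard the droplet creation with `require_env DIGITAL_OCEAN_ACCESS_TOKEN && docker-machine create ...`.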

Install Docker Machine if you don't already have it on your local machine.

Spin up a single droplet called runner-node:

$ docker-machine create \
    --driver digitalocean \
    --digitalocean-access-token $DIGITAL_OCEAN_ACCESS_TOKEN \
    --digitalocean-region "nyc1" \
    --digitalocean-image "debian-10-x64" \
    --digitalocean-size "s-4vcpu-8gb" \
    --engine-install-url "https://releases.rancher.com/install-docker/19.03.9.sh" \
    runner-node;

Docker Deployment

SSH into the droplet:

$ docker-machine ssh runner-node

Create the following files and folders:

├── config
│   └── config.toml
└── docker-compose.yml

Add the following to the docker-compose.yml file:

version: '3'

services:
  gitlab-runner-container:
    image: gitlab/gitlab-runner:v14.3.2
    container_name: gitlab-runner-container
    restart: always
    volumes:
      - ./config/:/etc/gitlab-runner/
      - /var/run/docker.sock:/var/run/docker.sock

Here, we:

- Used the official GitLab Runner Docker image.

- Added volumes for the Docker socket and "config" folder.

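- Didn't publish any ports. The runner polls GitLab for jobs over outbound connections, so nothing needs to be exposed on the Docker host. Port 9252, the runner's Prometheus metrics port, only matters if you enable metrics, which this setup doesn't.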

Follow the official installation guide to download and install Docker Compose on the droplet, and then spin up the container:

$ docker-compose up -d

If you run into issues with Docker Compose hanging, review this Stack Overflow question.

Next, you'll need to obtain a registration token and URL. Within your group's "CI/CD Settings", expand the "Runners" section. Be sure to disable the shared runners as well.

Run the following command to register a new runner, making sure to replace <YOUR-GITLAB-REGISTRATION-TOKEN> and <YOUR-GITLAB-URL> with your group's registration token and URL:

$ docker-compose exec gitlab-runner-container \
    gitlab-runner register \
    --non-interactive \
    --url <YOUR-GITLAB-URL> \
    --registration-token <YOUR-GITLAB-REGISTRATION-TOKEN> \
    --executor docker \
    --description "Sample Runner 1" \
    --docker-image "docker:stable" \
    --docker-volumes /var/run/docker.sock:/var/run/docker.sock

You should see something similar to:

Runtime platform    arch=amd64 os=linux pid=18 revision=e0218c92 version=14.3.2
Running in system-mode.

Registering runner... succeeded    runner=hvdSfcc1
Runner registered successfully. Feel free to start it, but if it's running already
the config should be automatically reloaded!

Again, we used the docker socket binding method so that docker commands can run inside the jobs that run on the runner.

Review GitLab Runner commands to learn more about the register command along with additional commands for registering and managing runners.

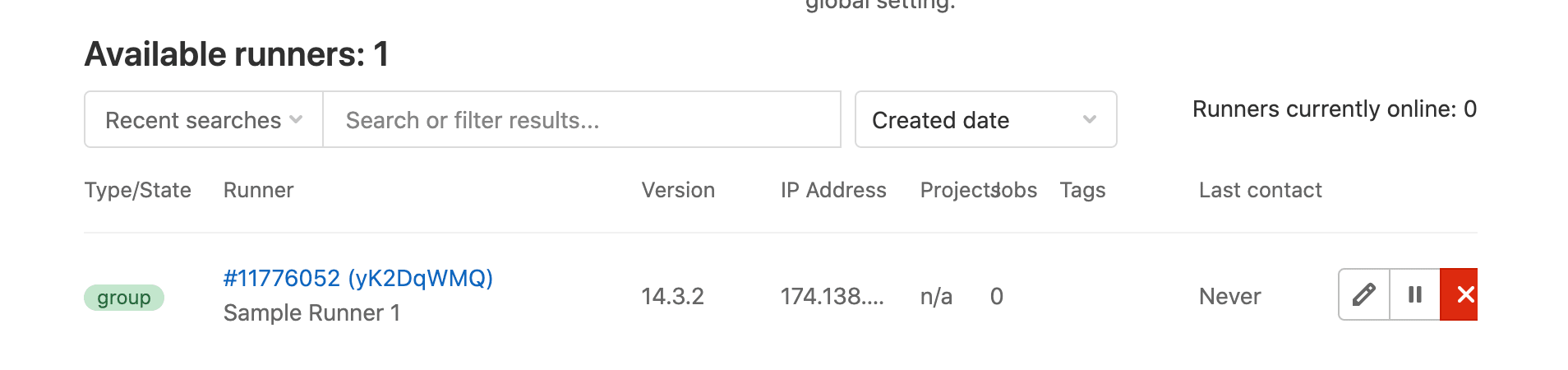

Back on GitLab, you should see the registered runner in your group's "CI/CD Settings":

Test it out by running the CI/CD pipeline for one of your repositories.

Back in your terminal, take a look at the container logs:

$ docker logs gitlab-runner-container -f

You should see the status of the job:

Checking for jobs... received    job=1721313345 repo_url=https://gitlab.com/testdriven/testing-gitlab-ci.git runner=yK2DqWMQ
Job succeeded    duration_s=32.174537956 job=1721313345 project=30721568 runner=yK2DqWMQ
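
Once a few pipelines have run, you may want a quick tally rather than scrolling through the log. Here's a small sketch that counts the "Job succeeded" lines shown above; `count_succeeded` is a hypothetical helper that reads log lines from standard input.

```bash
# count_succeeded: read runner log lines on stdin and print how many report success.
count_succeeded() {
  grep -c 'Job succeeded'
}

# Demo with lines shaped like the log output above.
printf '%s\n' \
  'Checking for jobs... received    job=1721313345 runner=yK2DqWMQ' \
  'Job succeeded    duration_s=32.174537956 job=1721313345' \
  | count_succeeded
```

Against the real container, this would look something like `docker logs gitlab-runner-container 2>&1 | count_succeeded`, where `2>&1` is there in case the runner writes its log to stderr.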

Configuration

Take note of the config file, config/config.toml:

$ cat config/config.toml

concurrent = 1
check_interval = 0

[session_server]
  session_timeout = 1800

[[runners]]
  name = "Sample Runner 1"
  url = "https://gitlab.com/"
  token = "yK2DqWMQB1CqPsRx6gwn"
  executor = "docker"
  [runners.custom_build_dir]
  [runners.cache]
    [runners.cache.s3]
    [runners.cache.gcs]
    [runners.cache.azure]
  [runners.docker]
    tls_verify = false
    image = "docker:stable"
    privileged = false
    disable_entrypoint_overwrite = false
    oom_kill_disable = false
    disable_cache = false
    volumes = ["/var/run/docker.sock:/var/run/docker.sock", "/cache"]
    shm_size = 0

Take a look at Advanced configuration to learn more about the available options. You can configure a number of things like logging and caching options, memory limits, and number of CPUs, to name a few.

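Since we're not leveraging an external cache, like Amazon S3 or Google Cloud Storage, remove the [runners.cache] section along with its empty [runners.cache.s3], [runners.cache.gcs], and [runners.cache.azure] subsections. Then, restart the runner: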

$ docker-compose exec gitlab-runner-container gitlab-runner restart
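
If you'd rather script the cache cleanup than edit the file by hand, something like the sketch below could work. It assumes the cache tables are empty, as they are in the generated config above, and `strip_empty_cache_tables` is a hypothetical helper name. The demo runs on a scratch copy, not the live config.

```bash
# strip_empty_cache_tables FILE
# Deletes the [runners.cache] header and its .s3/.gcs/.azure sub-headers,
# leaving a FILE.bak backup. Assumes those tables are empty: if they held keys,
# the keys would silently end up in the preceding table.
strip_empty_cache_tables() {
  sed -i.bak -E '/^[[:space:]]*\[runners\.cache(\.(s3|gcs|azure))?\][[:space:]]*$/d' "$1"
}

# Demo on a scratch file (scratch is hypothetical).
scratch="$(mktemp)"
cat > "$scratch" <<'EOF'
[[runners]]
  name = "Sample Runner 1"
  [runners.custom_build_dir]
  [runners.cache]
    [runners.cache.s3]
    [runners.cache.gcs]
    [runners.cache.azure]
  [runners.docker]
    image = "docker:stable"
EOF

strip_empty_cache_tables "$scratch"
cat "$scratch"
```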

Try running two jobs at once. Since concurrent = 1, only one job can run at a time, so the other job stays in the "pending" state until the first one finishes. If you're just setting up the runners for a small team, you may be able to get away with allowing only a single job to run at a time. As the team grows, you'll want to experiment with the concurrency config options:

- concurrent - limits how many jobs can run concurrently globally, across all runners.
- limit - applies to individual runners, limiting the number of jobs that can be handled concurrently. Default is 0, which means do not apply a limit.
- request_concurrency - applies to individual runners, limiting the number of concurrent requests for new jobs. Default is 1.
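
To get a feel for how concurrent and limit interact, here's a simplified model, not the runner's actual scheduler. It treats the number of jobs that can run at once as the smaller of concurrent and the sum of the per-runner limits, with a limit of 0 meaning "no per-runner cap". The function name `effective_jobs` is hypothetical.

```bash
# effective_jobs CONCURRENT LIMIT...
# Prints min(CONCURRENT, sum of LIMITs); a LIMIT of 0 means that runner is uncapped,
# so only the global cap applies.
effective_jobs() {
  concurrent="$1"
  shift
  total=0
  for limit in "$@"; do
    if [ "$limit" -eq 0 ]; then
      echo "$concurrent"
      return 0
    fi
    total=$((total + limit))
  done
  if [ "$total" -lt "$concurrent" ]; then
    echo "$total"
  else
    echo "$concurrent"
  fi
}

effective_jobs 1 0      # one runner, default limit: prints 1
effective_jobs 4 2 2    # two runners, limit = 2 each: prints 4
effective_jobs 4 1 1    # per-runner limits are the bottleneck: prints 2
```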

Before we update the concurrency options, add a new runner:

$ docker-compose exec gitlab-runner-container \
    gitlab-runner register \
    --non-interactive \
    --url <YOUR-GITLAB-URL> \
    --registration-token <YOUR-GITLAB-REGISTRATION-TOKEN> \
    --executor docker \
    --description "Sample Runner 2" \
    --docker-image "docker:stable" \
    --docker-volumes /var/run/docker.sock:/var/run/docker.sock

Then, update config/config.toml like so:

concurrent = 4  # NEW
check_interval = 0

[session_server]
  session_timeout = 1800

[[runners]]
  name = "Sample Runner 1"
  url = "https://gitlab.com/"
  token = "yK2DqWMQB1CqPsRx6gwn"
  executor = "docker"
  limit = 2  # NEW
  request_concurrency = 2  # NEW
  [runners.custom_build_dir]
  [runners.docker]
    tls_verify = false
    image = "docker:stable"
    privileged = false
    disable_entrypoint_overwrite = false
    oom_kill_disable = false
    disable_cache = false
    volumes = ["/var/run/docker.sock:/var/run/docker.sock", "/cache"]
    shm_size = 0

[[runners]]
  name = "Sample Runner 2"
  url = "https://gitlab.com/"
  token = "qi-b3gFzVaX3jRRskJbz"
  limit = 2  # NEW
  request_concurrency = 2  # NEW
  executor = "docker"
  [runners.custom_build_dir]
  [runners.cache]
    [runners.cache.s3]
    [runners.cache.gcs]
    [runners.cache.azure]
  [runners.docker]
    tls_verify = false
    image = "docker:stable"
    privileged = false
    disable_entrypoint_overwrite = false
    oom_kill_disable = false
    disable_cache = false
    volumes = ["/var/run/docker.sock:/var/run/docker.sock", "/cache"]
    shm_size = 0

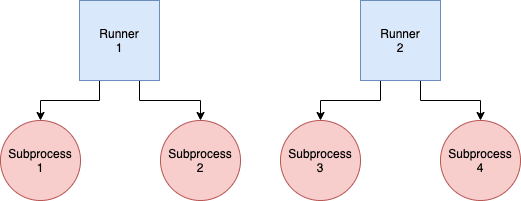

Now, we can run four jobs concurrently across two runners, with each runner having two subprocesses:

Restart:

$ docker-compose exec gitlab-runner-container gitlab-runner restart

Test it out by running four jobs.
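
If fewer than four jobs end up running at once, a quick look at the saved config can rule out a typo. The sketch below prints the global concurrent value and the number of [[runners]] entries. `summarize_config` is a hypothetical helper, and the demo uses a trimmed-down scratch file rather than the real config/config.toml.

```bash
# summarize_config FILE
# Prints the top-level "concurrent" value and how many [[runners]] entries FILE has.
summarize_config() {
  file="$1"
  concurrent="$(awk -F'=' '/^[ \t]*concurrent[ \t]*=/ { gsub(/[ \t]|#.*/, "", $2); print $2; exit }' "$file")"
  runners="$(grep -cF '[[runners]]' "$file")"
  echo "concurrent=$concurrent runners=$runners"
}

# Demo on a scratch file (sample is hypothetical).
sample="$(mktemp)"
cat > "$sample" <<'EOF'
concurrent = 4  # NEW
check_interval = 0

[[runners]]
  name = "Sample Runner 1"
  limit = 2
  request_concurrency = 2

[[runners]]
  name = "Sample Runner 2"
  limit = 2
  request_concurrency = 2
EOF

summarize_config "$sample"
```

On the droplet, you'd point it at the real file instead: `summarize_config config/config.toml`.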

Keep in mind that if you're building and testing Docker images, you'll eventually run out of disk space. So, it's a good idea to remove all unused images and containers periodically on the Docker host.

Example crontab:

@weekly /usr/bin/docker system prune -f
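
A fixed schedule works fine, but you could also prune only when the disk is actually filling up. The sketch below is a dry run under a few assumptions: the 80% threshold is arbitrary, the helper names are hypothetical, and the check covers the root filesystem, which may not be where Docker stores its data on your host.

```bash
# Print the used-space percentage (integer, no % sign) of the filesystem holding a path.
disk_usage_percent() {
  df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# should_prune USAGE THRESHOLD: succeeds when usage is at or above the threshold.
should_prune() {
  [ "$1" -ge "$2" ]
}

usage="$(disk_usage_percent /)"
threshold=80   # hypothetical threshold, in percent

if should_prune "$usage" "$threshold"; then
  # Dry run: swap the echo for the real command once you're happy with the logic.
  echo "usage ${usage}% >= ${threshold}%: would run 'docker system prune -f'"
else
  echo "usage ${usage}% is below ${threshold}%: nothing to do"
fi
```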

--

That's it!

Don't forget to unregister the runners:

$ docker-compose exec gitlab-runner-container gitlab-runner unregister --all-runners

Then, back on your local machine, bring down the Machine/droplet:

$ docker-machine rm runner-node

Michael Herman
