In this tutorial, we'll look at how to deploy a Django app to AWS Elastic Container Service (ECS) using AWS Copilot.

Prerequisites:

- Python v3.11 (or newer)

- Docker v24.0.6 (or newer)

- An AWS account


Objectives

- Explain what AWS Copilot is and look at its basic concepts.

- Deploy a Django application to AWS ECS using AWS Copilot CLI.

- Learn how AWS Copilot manages environment variables and secrets.

- Spin up a PostgreSQL database using RDS and CloudFormation.

- Link a domain name to the web app and serve it over HTTPS.

- Set up a pipeline that deploys code from a GitHub repo.

What is AWS Copilot?

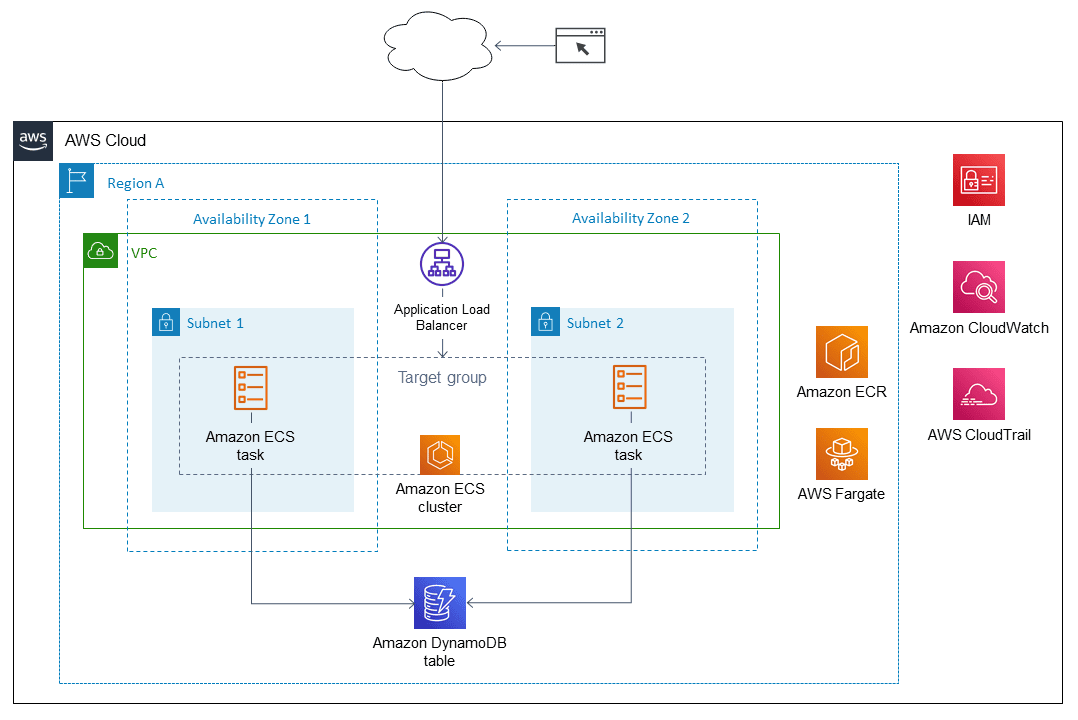

AWS Copilot CLI is an open-source toolkit that makes it easy for developers to build, release, and operate production-ready containerized apps. Under the hood, it uses many AWS services, including containers (ECS and ECR), databases (RDS), load balancers, file storage (S3), and CDNs (CloudFront), to name a few.

The solution lets you focus on your app without worrying about the underlying infrastructure. Moreover, it aligns with AWS's best practices for networking, security, and scalability.

A typical AWS Copilot setup looks something like this:

The CLI comes at no extra charge. You only pay for the resources your app consumes.

Why AWS Copilot?

- Makes working with AWS a breeze.

- A well-thought-out tool with good documentation.

- Covers the deployment of most cloud architectures (web apps, static sites, jobs, etc.).

- Lets you extend its functionality using CloudFormation in the form of add-ons.

Why not AWS Copilot?

- Relatively long infrastructure provisioning/deployment times.

- At the time of writing, AWS Copilot is in maintenance mode. There are multiple ongoing discussions regarding its future development (#5987, #5925).

- Requires a solid understanding of the underlying AWS services, which makes it inappropriate for beginners.

- Vendor lock-in.

AWS Copilot Concepts

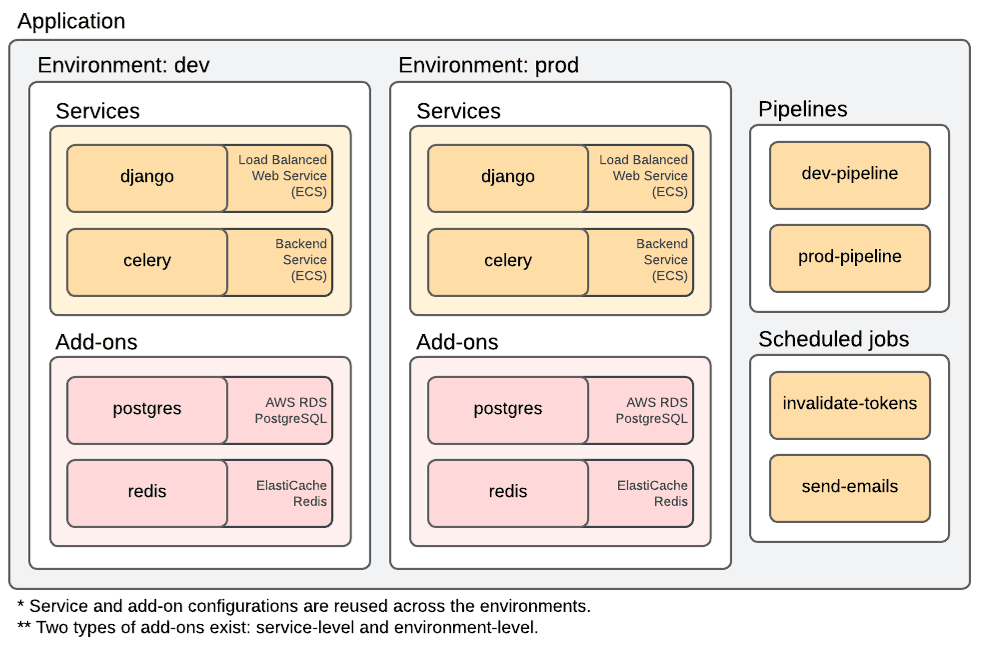

Before diving into the deployment process, let's familiarize ourselves with the essential Copilot concepts:

- Application is a collection of services, environments, and pipelines.

- Environment is a holder for services (SVCs). Each environment has a VPC, security groups, and subnets, among other things. A typical production-ready Copilot app has two environments: `dev` and `prod`.

- Service (SVC) is any kind of app running on AWS, such as a load-balanced web service, a backend service, a static site, or a request-driven web service.

- Pipeline is used to automate the deployment process. You can configure a pipeline to automatically deploy your source code every time you push code to a VCS (GitHub, GitLab, CodeCommit, Bitbucket).

- Job can be leveraged for recurring tasks (fixed schedule or periodically).

The concepts can be visualized this way:

Project Setup

In this tutorial, we'll be deploying a simple image hosting app called django-images. To make things easier for us, I've already Dockerized the project following this great article.

To sum up, I've:

- Switched from SQLite to production-ready PostgreSQL database.

- Taken care of the environment variables (and modified core/settings.py accordingly). For example, settings such as `SECRET_KEY`, `ALLOWED_HOSTS`, and the database settings are now loaded from the environment.

- Froze the dependencies to requirements.txt and installed Gunicorn.

- Created Dockerfile.dev, Dockerfile.prod, and docker-compose.dev.yml files.

- Utilized entrypoint scripts to make the web service wait until the db service is ready.

If you're deploying your own project, use the above list as a checklist before moving on to the next step.
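For reference, the environment-driven part of core/settings.py could look roughly like the sketch below. It's an illustration under assumptions, not the project's actual file: it assumes the variable names used later in app/.aws.env (`DEBUG`, `SECRET_KEY`, `ALLOWED_HOSTS`, `CSRF_TRUSTED_ORIGINS`, and the `SQL_*` values), space-separated host lists, and a hypothetical `load_settings()` helper that only exists to make the logic testable.

```python
import os
from collections.abc import Mapping


def load_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Hypothetical helper: read Django settings from environment variables.

    A real settings.py would normally use plain module-level assignments;
    wrapping them in a function just makes the logic easy to test.
    """
    return {
        # "1" enables debug mode; any other value (or none) disables it
        "DEBUG": env.get("DEBUG", "0") == "1",
        # No fallback on purpose: a missing SECRET_KEY raises KeyError
        "SECRET_KEY": env["SECRET_KEY"],
        # Space-separated lists, e.g. "localhost 127.0.0.1 .amazonaws.com"
        "ALLOWED_HOSTS": env.get("ALLOWED_HOSTS", "").split(),
        "CSRF_TRUSTED_ORIGINS": env.get("CSRF_TRUSTED_ORIGINS", "").split(),
        "DATABASES": {
            "default": {
                # Falls back to SQLite when no SQL_* variables are provided
                "ENGINE": env.get("SQL_ENGINE", "django.db.backends.sqlite3"),
                "NAME": env.get("SQL_DATABASE", "db.sqlite3"),
                "USER": env.get("SQL_USER", ""),
                "PASSWORD": env.get("SQL_PASSWORD", ""),
                "HOST": env.get("SQL_HOST", ""),
                "PORT": env.get("SQL_PORT", ""),
            }
        },
    }
```

For example, `load_settings({"SECRET_KEY": "x", "ALLOWED_HOSTS": "localhost .amazonaws.com"})["ALLOWED_HOSTS"]` returns `['localhost', '.amazonaws.com']`.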

First, clone the base branch from the django-aws-copilot repo:

```sh
$ git clone https://github.com/duplxey/django-aws-copilot.git --branch base --single-branch
$ cd django-aws-copilot && rm -rf .git/
```

Next, build the images and spin up the containers:

```sh
$ docker compose -f docker-compose.dev.yml build
$ docker compose -f docker-compose.dev.yml up -d
```

The web app should be accessible at http://localhost:8000/. Ensure the app works by uploading an image.

Before moving forward, I suggest you quickly review the project's source code.

Install AWS Copilot CLI

Before installing AWS Copilot CLI, ensure you have AWS CLI set up and configured:

```sh
$ aws sts get-caller-identity
{
    "UserId": "PUBBEGSA1UHYLOL30L5MK",
    "Account": "079316456975",
    "Arn": "arn:aws:iam::079316456975:user/duplxey"
}
```

If you get an unknown command error or your identity can't be fetched:

- Download AWS CLI from the official website.

- Navigate to your AWS Security Credentials page and create a new access key. Take note of your access key ID and secret access key.

- Run `aws configure` and provide your access key credentials.

Next, install AWS Copilot CLI by following the official installation guide.

Verify the installation:

```sh
$ copilot --version
copilot version: v1.34.0
```

Deploy App

Create App

Start by creating a Copilot application:

```sh
$ copilot app init django-images
# copilot app init <app-name> --domain <your-domain-name>
```

If you have a domain name registered with Route 53, append `--domain <your-domain-name>` to the command. Later, I'll also explain how to attach domain names that are not managed by Route 53.

This will set up the required Copilot IAM roles, create a KMS key for data encryption, create an S3 bucket for storing app configuration, and take care of the domain settings (if provided).

You'll notice a "copilot" directory was created in your project root. This directory will contain Copilot configuration files for your environments, SVCs, pipelines, and more.

Create Environment

Moving along, let's create the environment.

Run the following command:

```sh
$ copilot env init --name dev
# copilot env init --name <env-name>
```

Options:

- Credential source: Pick the desired profile

- Default environment configuration: Yes, use default

This command will generate a manifest file at copilot/environments/dev/manifest.yml.

It's a common practice to create two environments, one for development and testing and the other for production. For the sake of simplicity, we'll create just one called `dev`.

Next, deploy the environment to AWS:

```sh
$ copilot env deploy --name dev
# copilot env deploy --name <env-name>
```

Copilot will generate a production-ready networking setup following AWS best practices. On top of that, it'll create a CloudFormation stack for additional resources and an ECS cluster for all your services.

To learn more about Copilot Environments, check out the official docs.

Database Setup

Unfortunately, Copilot, as of writing, natively supports only two types of databases:

- Aurora Serverless (SQL)

- DynamoDB (NoSQL)

While we could use Postgres-compatible Aurora, we'll opt for a custom Postgres RDS instance. The latter is cheaper and included in the AWS Free Tier.

However, if you wish to use Aurora, you can set it up by running:

```sh
$ copilot storage init
```

After you set up the database, an environment variable called `MYCLUSTER_SECRET` is injected into your workload. To connect to the database, you'll need to parse it and modify settings.py accordingly.
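A minimal sketch of that parsing step follows. It assumes the PostgreSQL-compatible Aurora engine and a secret stored as a JSON string with the keys AWS Secrets Manager commonly uses for RDS/Aurora credentials (`username`, `password`, `host`, `port`, `dbname`). The function name is hypothetical, and the variable name depends on what you call the cluster during `copilot storage init`, so inspect the injected value before relying on these keys.

```python
import json
import os


def database_config_from_secret(env_var: str = "MYCLUSTER_SECRET") -> dict:
    """Hypothetical helper: build DATABASES["default"] from a JSON secret.

    Assumes the secret uses the usual RDS/Aurora Secrets Manager keys:
    username, password, host, port, and dbname.
    """
    secret = json.loads(os.environ[env_var])
    return {
        "ENGINE": "django.db.backends.postgresql",  # assumes Aurora PostgreSQL
        "NAME": secret["dbname"],
        "USER": secret["username"],
        "PASSWORD": secret["password"],
        "HOST": secret["host"],
        # The port arrives as a JSON number; Django accepts it as a string
        "PORT": str(secret["port"]),
    }


# Possible wiring in core/settings.py:
# DATABASES = {"default": database_config_from_secret()}
```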

To create a PostgreSQL instance, we'll use a custom CloudFormation template.

Go ahead and create an "addons" directory within the "environments" directory. Inside "addons", create files named addons.parameters.yml and postgres.yml.

```text
copilot/
├── environments/
│   ├── addons/
│   │   ├── addons.parameters.yml
│   │   └── postgres.yml
│   └── dev/
└── .workspace
```

Put the following contents into addons.parameters.yml:

```yaml
# copilot/environments/addons/addons.parameters.yml
Parameters:
  ShouldDeployPostgres: true
```

And the following into postgres.yml:

```yaml
# copilot/environments/addons/postgres.yml
Parameters:
  App:
    Type: String
    Description: Your application's name.
  Env:
    Type: String
    Description: The environment name your service, job, or workflow is being deployed to.
  ShouldDeployPostgres:
    Type: String
    Default: false
    Description: Whether to deploy environment addons in this folder.
    AllowedValues:
      - true
      - false

Conditions:
  ShouldDeployPostgres: !Equals [!Ref ShouldDeployPostgres, 'true']

Resources:
  DBSubnetGroup:
    Condition: ShouldDeployPostgres
    Metadata:
      'aws:copilot:description': Get subnet group to place DB into
    Type: AWS::RDS::DBSubnetGroup
    Properties:
      DBSubnetGroupDescription: Group of subnets to place DB into
      SubnetIds: !Split [ ',', { 'Fn::ImportValue': !Sub '${App}-${Env}-PrivateSubnets' } ]

  DatabaseSecurityGroup:
    Condition: ShouldDeployPostgres
    Metadata:
      'aws:copilot:description': Add DB to VPC to be able to talk to others
    Type: AWS::EC2::SecurityGroup
    Properties:
      GroupDescription: DB Security Group
      VpcId: { 'Fn::ImportValue': !Sub '${App}-${Env}-VpcId' }

  DBIngress:
    Condition: ShouldDeployPostgres
    Metadata:
      'aws:copilot:description': Allow ingress from containers in my application to the DB cluster
    Type: AWS::EC2::SecurityGroupIngress
    Properties:
      Description: Ingress from Fargate containers
      GroupId: !Ref 'DatabaseSecurityGroup'
      IpProtocol: tcp
      FromPort: 5432
      ToPort: 5432
      SourceSecurityGroupId: { 'Fn::ImportValue': !Sub '${App}-${Env}-EnvironmentSecurityGroup' }

  DBInstance:
    Condition: ShouldDeployPostgres
    Metadata:
      'aws:copilot:description': Create the actual DB cluster
    Type: AWS::RDS::DBInstance
    Properties:
      Engine: postgres
      EngineVersion: 16.4
      DBInstanceClass: db.t3.micro
      AllocatedStorage: 20
      StorageType: gp2
      MultiAZ: false
      AllowMajorVersionUpgrade: false
      AutoMinorVersionUpgrade: true
      DeletionProtection: false
      BackupRetentionPeriod: 7
      EnablePerformanceInsights: true
      DBName: postgres
      MasterUsername: postgres
      MasterUserPassword: !Sub '{{resolve:ssm-secure:/copilot/${App}/${Env}/secrets/SQL_PASSWORD:1}}'
      DBSubnetGroupName: !Ref 'DBSubnetGroup'
      VPCSecurityGroups:
        - !Ref 'DatabaseSecurityGroup'

  DBNameParam:
    Condition: ShouldDeployPostgres
    Metadata:
      'aws:copilot:description': Store the DB name in SSM so that other services can retrieve it
    Type: AWS::SSM::Parameter
    Properties:
      Name: !Sub '/copilot/${App}/${Env}/secrets/SQL_DATABASE'
      Type: String
      Value: !GetAtt 'DBInstance.DBName'

  DBEndpointAddressParam:
    Condition: ShouldDeployPostgres
    Metadata:
      'aws:copilot:description': Store the DB endpoint in SSM so that other services can retrieve it
    Type: AWS::SSM::Parameter
    Properties:
      Name: !Sub '/copilot/${App}/${Env}/secrets/SQL_HOST'
      Type: String
      Value: !GetAtt 'DBInstance.Endpoint.Address'

  DBPortParam:
    Condition: ShouldDeployPostgres
    Metadata:
      'aws:copilot:description': Store the DB port in SSM so that other services can retrieve it
    Type: AWS::SSM::Parameter
    Properties:
      Name: !Sub '/copilot/${App}/${Env}/secrets/SQL_PORT'
      Type: String
      Value: !GetAtt 'DBInstance.Endpoint.Port'

Outputs:
  DatabaseSecurityGroup:
    Condition: ShouldDeployPostgres
    Description: Security group for DB
    Value: !Ref 'DatabaseSecurityGroup'
```

This CloudFormation template follows the format of Copilot's environment addon templates. It creates a database instance in the environment's private subnets, along with a security group that lets your services reach it on port 5432. Additionally, it stores the following parameters in AWS Systems Manager (SSM) Parameter Store:

- SQL_DATABASE

- SQL_HOST

- SQL_PORT

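For the template to work, we need to create two secrets: SQL_USER and SQL_PASSWORD. The template itself reads SQL_PASSWORD when it creates the database, and the service will read both. Since the template hardcodes `MasterUsername: postgres`, set SQL_USER to `postgres`.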

The easiest way to add these two secrets is by running:

```sh
$ copilot secret init --name SQL_USER       # postgres
$ copilot secret init --name SQL_PASSWORD   # complexpassword123
```

Alternatively, you can manually add the secrets by navigating to the SSM Parameter Store dashboard. Remember to tag the secrets with `copilot-application: <app-name>` and `copilot-environment: <env-name>`.

Lastly, redeploy the environment:

```sh
$ copilot env deploy --name dev
```

Copilot will take a few minutes to provision a new database instance. Feel free to go grab a cup of coffee in the meantime.

Once completed, you should see the database in your RDS dashboard.

Create Service

Our app and environment are now ready for Django service deployment.

Navigate to your project root and create a service named web:

```sh
$ copilot svc init --name web
# copilot svc init --name <svc-name>
```

Options:

- Service type: Load Balanced Web Service

- Dockerfile: app/Dockerfile.prod

- Port: 8000

Copilot will generate an SVC manifest file at copilot/web/manifest.yml. In the next few sections, we'll slightly modify it before deploying the service to ECS.

Domain Alias

This step only applies if you've provided a Route53 domain name when initializing the Copilot application. If not, skip this section.

To add a domain alias, modify http.alias like so:

```yaml
# copilot/web/manifest.yml
http:
  # Use Route53 domain
  alias: ['mywebapp.click'] # replace me
```

Alias can also be a subdomain (e.g., django.mywebapp.click) and you can add multiple aliases.

Health Check

For the service to be considered healthy, the load balancer's health check needs to receive a 2xx status code. However, Django returns an error by default if the database migrations haven't been applied. To prevent deployment failures, we'll create a health check endpoint that always returns a 200 status code.

Create a new file named middleware.py in the "core" directory:

```python
# app/core/middleware.py
from django.http import HttpResponse
from django.utils.deprecation import MiddlewareMixin


class HealthCheckMiddleware(MiddlewareMixin):
    def process_request(self, request):
        if request.META["PATH_INFO"] == "/ping/":
            return HttpResponse("pong")
```

Make sure middleware.py ends with a single newline character; otherwise, Flake8 will fail (W292 if the final newline is missing, W391 if there's an extra blank line).

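Next, add the just-created middleware at the top of the MIDDLEWARE list in settings.py, so it answers health checks before any other middleware (such as the ones that validate the Host header against ALLOWED_HOSTS) can reject the request: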

```python
# app/core/settings.py
MIDDLEWARE = [
    "core.middleware.HealthCheckMiddleware",
    # ...
]
```

Wait for the development server to restart and navigate to http://localhost:8000/ping/. The server should return pong.

Finally, modify the SVC manifest to use the health check:

```yaml
# copilot/web/manifest.yml
http:
  # Health check settings
  healthcheck:
    protocol: 'http'
    path: '/ping/'
    port: 8000
    success_codes: '200-299'
    healthy_threshold: 2
    interval: 60s
    timeout: 10s
    grace_period: 60s
```
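To check the endpoint from a script rather than the browser, here's a small stdlib-only sketch. It requests `/ping/` once and compares the status code against a `success_codes`-style matcher (a single code, a comma-separated list, or a range such as `200-299`). The function names are hypothetical, and it only approximates the load balancer: for example, `urlopen` follows redirects, whereas a load balancer health check simply records the redirect status.

```python
from urllib.error import HTTPError
from urllib.request import urlopen


def status_matches(status: int, success_codes: str) -> bool:
    """Check a status code against a matcher like '200', '200,302' or '200-299'."""
    for part in success_codes.split(","):
        part = part.strip()
        if "-" in part:
            low, high = (int(x) for x in part.split("-", 1))
            if low <= status <= high:
                return True
        elif part and int(part) == status:
            return True
    return False


def check_health(url: str, success_codes: str = "200-299", timeout: float = 10.0) -> bool:
    """Hypothetical smoke test: request the health check URL once."""
    try:
        with urlopen(url, timeout=timeout) as response:
            return status_matches(response.status, success_codes)
    except HTTPError as error:
        # urlopen raises for 4xx/5xx; the code might still be in success_codes
        return status_matches(error.code, success_codes)
    except OSError:
        # Connection refused, DNS failure, or timeout: treat as unhealthy
        return False
```

With the development server running, `check_health("http://localhost:8000/ping/")` should return `True`.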

Environment Variables & Secrets

Instead of cluttering SSM with all the environment variables, we'll create an additional environment file. This file shouldn't contain anything confidential, as it will be committed to the VCS.

Create an .aws.env file in the "app" directory with the following contents:

```text
# app/.aws.env
# Tutorial values only: for production, set DEBUG=0 and store SECRET_KEY in SSM as a secret.
DEBUG=1
SECRET_KEY=acomplexsecretkey
ALLOWED_HOSTS=localhost 127.0.0.1 [::1] .amazonaws.com
CSRF_TRUSTED_ORIGINS=http://localhost http://127.0.0.1 http://*.amazonaws.com https://*.amazonaws.com
SQL_ENGINE=django.db.backends.postgresql
# SQL_DATABASE=<provided by copilot>
# SQL_USER=<provided by copilot>
# SQL_PASSWORD=<provided by copilot>
# SQL_HOST=<provided by copilot>
# SQL_PORT=<provided by copilot>
```

If you're planning to use a custom domain name, make sure to add it to `ALLOWED_HOSTS` and `CSRF_TRUSTED_ORIGINS`.
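If you'd like to sanity-check .aws.env locally before deploying, the sketch below parses it using the simple rules we assume ECS applies to environment files: one `KEY=VALUE` pair per line, blank lines and lines starting with `#` ignored, and no quote handling. The parser is hypothetical and deliberately strict; it isn't what Copilot or ECS run internally. It also shows why the host lists are space-separated: the value arrives as one string, and settings.py has to split it.

```python
def parse_env_file(text: str) -> dict[str, str]:
    """Hypothetical parser for KEY=VALUE lines, skipping blanks and # comments."""
    variables = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"Not a KEY=VALUE line: {raw_line!r}")
        # Everything after the first "=" is kept as-is (no quote stripping)
        variables[key.strip()] = value
    return variables


sample = """
DEBUG=1
ALLOWED_HOSTS=localhost 127.0.0.1 [::1] .amazonaws.com
# SQL_HOST=<provided by copilot>
"""
env = parse_env_file(sample)
# The list only becomes a list once settings.py splits the string
hosts = env["ALLOWED_HOSTS"].split()
```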

Next, modify the service manifest like so:

```yaml
# copilot/web/manifest.yml
# Environment variables
variables:
  COMMAND: gunicorn core.wsgi:application --bind 0.0.0.0:8000 --access-logfile - --error-logfile -
  PORT: 8000
env_file: app/.aws.env

# Secrets from AWS Systems Manager (SSM) Parameter Store.
secrets:
  SQL_DATABASE: /copilot/${COPILOT_APPLICATION_NAME}/${COPILOT_ENVIRONMENT_NAME}/secrets/SQL_DATABASE
  SQL_USER: /copilot/${COPILOT_APPLICATION_NAME}/${COPILOT_ENVIRONMENT_NAME}/secrets/SQL_USER
  SQL_PASSWORD: /copilot/${COPILOT_APPLICATION_NAME}/${COPILOT_ENVIRONMENT_NAME}/secrets/SQL_PASSWORD
  SQL_HOST: /copilot/${COPILOT_APPLICATION_NAME}/${COPILOT_ENVIRONMENT_NAME}/secrets/SQL_HOST
  SQL_PORT: /copilot/${COPILOT_APPLICATION_NAME}/${COPILOT_ENVIRONMENT_NAME}/secrets/SQL_PORT
```
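These secret paths only work because they resolve to the same SSM parameter names that postgres.yml writes (and that `copilot secret init` creates). The sketch below expands both placeholder styles with Python's `string.Template`, which happens to use the same `${NAME}` syntax, to show that both styles yield the same path for the django-images app and the dev environment. It only illustrates the naming convention; Copilot and CloudFormation perform their own substitution, and the helper names are hypothetical.

```python
from string import Template

# The two path patterns used in this tutorial (manifest vs. CloudFormation addon)
MANIFEST_PATH = "/copilot/${COPILOT_APPLICATION_NAME}/${COPILOT_ENVIRONMENT_NAME}/secrets/${name}"
ADDON_PATH = "/copilot/${App}/${Env}/secrets/${name}"


def manifest_secret_path(app: str, env: str, name: str) -> str:
    """Expand the manifest-style placeholders (hypothetical helper)."""
    return Template(MANIFEST_PATH).substitute(
        COPILOT_APPLICATION_NAME=app, COPILOT_ENVIRONMENT_NAME=env, name=name
    )


def addon_secret_path(app: str, env: str, name: str) -> str:
    """Expand the !Sub-style placeholders from postgres.yml (hypothetical helper)."""
    return Template(ADDON_PATH).substitute(App=app, Env=env, name=name)


for secret in ["SQL_DATABASE", "SQL_HOST", "SQL_PORT"]:
    # If these ever diverge, the service won't find the parameter at runtime
    assert manifest_secret_path("django-images", "dev", secret) == addon_secret_path("django-images", "dev", secret)
```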

Great, the service is now ready to be deployed!

Deploy Service

To deploy the service, run:

```sh
$ copilot svc deploy --name web
# copilot svc deploy --name <svc-name>
```

Wait for the deployment to complete, then check if you can reach the web service using your favorite web browser.

You'll most likely get a migration error, but don't worry. We'll fix it in the next step!

Migrate Database & Collect Static Files

To migrate the database and collect static files, run the following two commands:

```sh
$ copilot svc exec --app django-images --name web --env dev --command "python manage.py migrate"
$ copilot svc exec --app django-images --name web --env dev --command "python manage.py collectstatic --noinput"
# copilot svc exec --app <app-name> --name <svc-name> --env <env-name> --command "<command>"
```
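If you redeploy often, you may want to script these two steps. The sketch below wraps the same `copilot svc exec` invocation shown above with `subprocess`. The helper names are hypothetical, it assumes `copilot` is on your PATH, and `dry_run=True` returns the commands without touching AWS.

```python
import subprocess

# The management commands from this section, run in order
POST_DEPLOY_COMMANDS = [
    "python manage.py migrate",
    "python manage.py collectstatic --noinput",
]


def exec_argv(app: str, svc: str, env: str, command: str) -> list[str]:
    """Build the argument list for one `copilot svc exec` call."""
    return [
        "copilot", "svc", "exec",
        "--app", app,
        "--name", svc,
        "--env", env,
        "--command", command,
    ]


def run_post_deploy(app: str, svc: str, env: str, dry_run: bool = False) -> list[list[str]]:
    """Run the post-deploy commands (or just return them when dry_run=True)."""
    calls = [exec_argv(app, svc, env, cmd) for cmd in POST_DEPLOY_COMMANDS]
    if not dry_run:
        for argv in calls:
            # check=True stops at the first failing command, e.g. a failed migration
            subprocess.run(argv, check=True)
    return calls
```

Passing the arguments as a list (rather than one shell string) keeps the quoted `--command` value intact without any shell escaping.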

Your app should be fully working now! Test it by uploading an image.

SSH into SVC

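To open a shell in your service's container, you'll need the AWS Session Manager plugin. Copilot connects through ECS Exec rather than plain SSH, but the experience is similar. If you don't have the plugin yet, go ahead and install it.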

SSH into the web service by running the following:

```sh
$ copilot svc exec --app django-images --name web --env dev
# copilot svc exec --app <app-name> --name <svc-name> --env <env-name>
```

To end the session, run `exit`.

To learn more about Copilot services, check out the official docs.

CI/CD

In this section, we'll set up a pipeline that automatically deploys source code from GitHub.

Create Repo

First, create a new repository on GitHub.

Then, navigate to your project root and run the following commands:

```sh
$ git init
$ git add .
$ git commit -m "project init"
$ git branch -M main
$ git remote add origin <your-remote-origin>
$ git push -u origin main
```

These commands initialize a new Git repository, stage all the files, commit them, rename the branch to main, and push the commit to the remote origin.

Ensure the files have been committed by navigating to your GitHub repo.

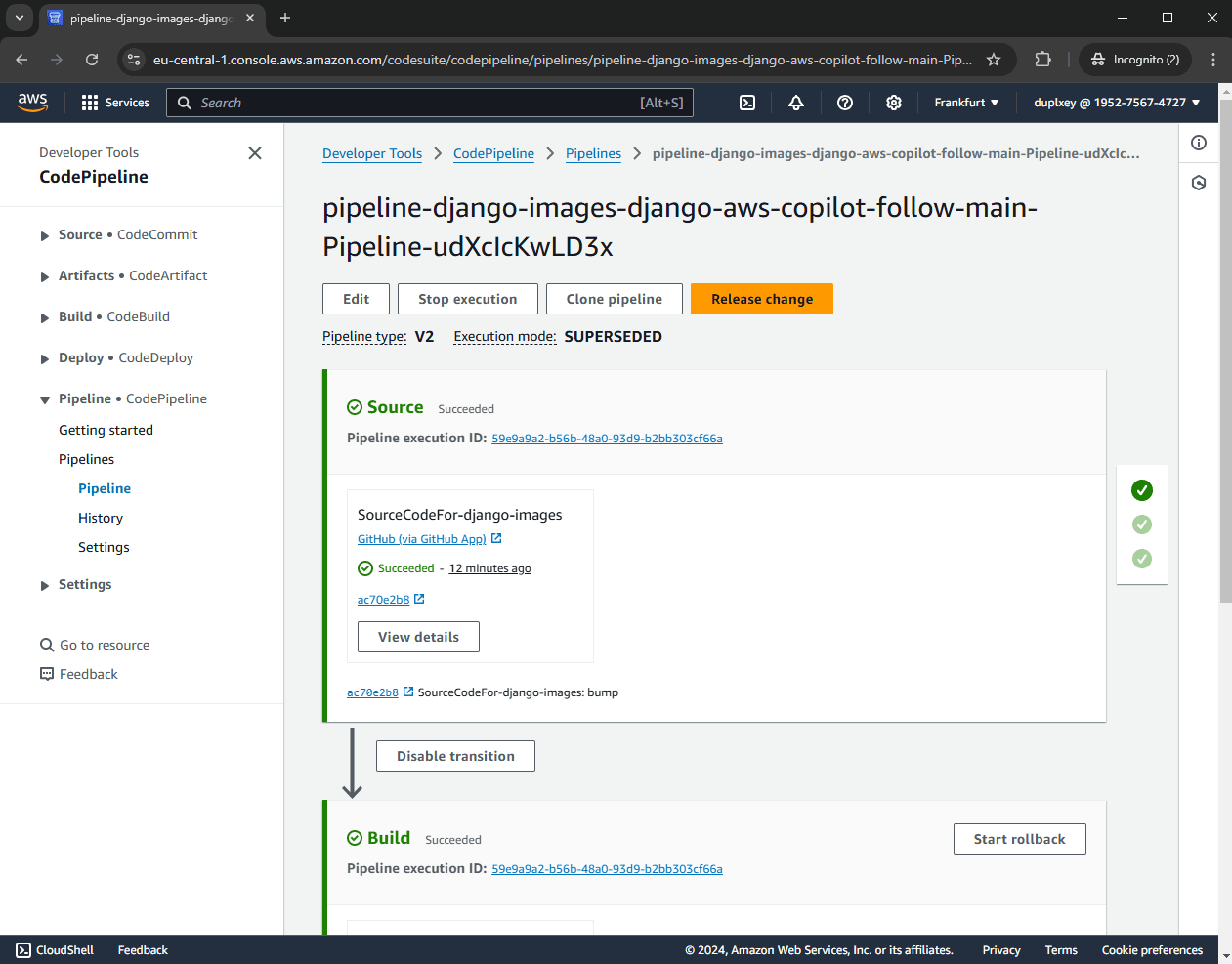

Create Pipeline

Moving along, create a Copilot pipeline:

$ copilot pipeline init

Options:

- Pipeline name: django-aws-copilot-follow-main

- What type of continuous delivery pipeline is this: Workloads

- 1st stage: dev

You'll notice a "pipelines" directory was created within your "copilot" directory. For each pipeline, a new directory is created within that directory, which contains a manifest.yml file and a buildspec.yml file.

```text
copilot/
├── environments/
│   ├── addons/
│   │   ├── addons.parameters.yml
│   │   └── postgres.yml
│   └── dev/
│       └── manifest.yml
├── pipelines/
│   └── django-aws-copilot-follow-main/
│       ├── buildspec.yml
│       └── manifest.yml
├── web/
│   └── manifest.yml
└── .workspace
```

You can use these two files to further customize the pipeline's behavior, for example, to run tests or require approval before deploying.

Next, add and commit the just-created files:

```sh
$ git add .
$ git commit -m "added pipeline"
$ git push origin main
```

Finally, deploy the pipeline:

```sh
$ copilot pipeline deploy
```

If you get a warning saying "ACTION REQUIRED!", navigate to your AWS CodePipeline dashboard, select the pipeline, and click "Update pending connection". Select "Install new app" to install the app to your GitHub repo.

Once you install the app, the pipeline status should change to "Connected".

Then, navigate to your pipeline info page and wait for the pipeline execution to complete.

If your pipeline fails, ensure:

- None of your YAML config files use Windows backslashes (`\`) in relative paths.

- Both entrypoint scripts have the execute permission. If they don't, set it, then commit and push the change:

```sh
$ git update-index --chmod=+x app/entrypoint.dev.sh
$ git update-index --chmod=+x app/entrypoint.prod.sh
```
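To check the execute permission before pushing, you can read the file modes Git has recorded: `git ls-files -s` prints each tracked path as `<mode> <object> <stage>\t<path>`, and executable files show up as mode `100755` (regular files as `100644`). The sketch below assumes this project's entrypoint paths; the function names are hypothetical.

```python
import subprocess
from collections.abc import Sequence

# Scripts that must be tracked with the executable bit (mode 100755)
ENTRYPOINTS = ("app/entrypoint.dev.sh", "app/entrypoint.prod.sh")


def non_executable(ls_files_output: str, paths: Sequence[str]) -> list[str]:
    """Return the paths that `git ls-files -s` output doesn't mark as 100755."""
    modes = {}
    for line in ls_files_output.splitlines():
        meta, _, path = line.partition("\t")
        if path:
            modes[path] = meta.split()[0]
    # Untracked paths have no recorded mode, so they're reported too
    return [p for p in paths if modes.get(p) != "100755"]


def check_repo(paths: Sequence[str] = ENTRYPOINTS) -> list[str]:
    """Run git in the current directory and report scripts missing +x."""
    output = subprocess.run(
        ["git", "ls-files", "-s", *paths],
        capture_output=True, text=True, check=True,
    ).stdout
    return non_executable(output, paths)
```

Run `check_repo()` from the project root; an empty list means both scripts are tracked as executable.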

Great, that's it!

From now on, every time you push your code to GitHub, a new pipeline will run.

To learn more about Copilot Pipelines, check out the official documentation.

Custom Domain

Skip this step if you've already configured a domain name when initializing your Copilot application. Also, to follow along, you'll need to own a domain name and have access to the domain's DNS settings.

Using a domain name that isn't managed by AWS Route 53 is a bit trickier.

Here, you'll have to manually request a public ACM certificate, verify it, and point your domain's DNS records at your Copilot application's load balancer (ALB).

First, navigate to the AWS Certificate Manager and click "Request a certificate":

- Certificate type: Request a public certificate

- Fully qualified domain name: yourdomain.com and *.yourdomain.com

- Validation method: DNS validation

- Key algorithm: RSA 2048

Click "Request".

To verify the certificate, navigate to your domain registrar's DNS settings and add the provided CNAME validation records, e.g.:

```text
+--------+--------------------------+-------------------------------+-------+
| Type   | Host                     | Value                         | TTL   |
+--------+--------------------------+-------------------------------+-------+
| CNAME  | _85ff....yourdomain.com  | _0ja8....acm-validations.aws  | Auto  |
+--------+--------------------------+-------------------------------+-------+
| CNAME  | _69ce....yourdomain.com  | _0exD....acm-validations.aws  | Auto  |
+--------+--------------------------+-------------------------------+-------+
```

Wait a few minutes for AWS to verify the domain name and issue a certificate. Once verified, take note of the certificate's ARN.

It should look something like this:

arn:aws:acm:eu-central-1:938748293:certificate/758effe9-xedd-4ce7-abb0-c35ab3b7b697
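Before pasting the ARN into the environment manifest, it's worth confirming that the certificate was requested in the same region as your Copilot environment: ACM certificates are regional, and a load balancer can only use one from its own region. Here's a minimal sketch that splits the ARN into its standard fields (`arn:partition:service:region:account-id:resource`) and flags obvious mismatches; the function names are hypothetical.

```python
def parse_arn(arn: str) -> dict[str, str]:
    """Split an ARN of the form arn:partition:service:region:account-id:resource."""
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"Not an ARN: {arn!r}")
    _, partition, service, region, account, resource = parts
    return {
        "partition": partition,
        "service": service,
        "region": region,
        "account": account,
        "resource": resource,
    }


def check_certificate_arn(arn: str, env_region: str) -> list[str]:
    """Return a list of problems; an empty list means the ARN looks usable."""
    info = parse_arn(arn)
    problems = []
    if info["service"] != "acm":
        problems.append(f"expected an ACM ARN, got service {info['service']!r}")
    if not info["resource"].startswith("certificate/"):
        problems.append("resource should start with 'certificate/'")
    if info["region"] != env_region:
        # The ALB can only use ACM certificates from its own region
        problems.append(f"certificate is in {info['region']}, environment is in {env_region}")
    return problems
```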

Next, navigate to your EC2 dashboard, select your Copilot load balancer, and take note of the "DNS name".

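Go back to your registrar's DNS settings and add the following two records. If your registrar doesn't allow a CNAME record on the root domain (@), use its ALIAS, ANAME, or CNAME-flattening option for that record instead: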

```text
+--------+--------------------------+-------------------------------+-------+
| Type   | Host                     | Value                         | TTL   |
+--------+--------------------------+-------------------------------+-------+
| CNAME  | @                        | django-Publi-hAbWrIU...com    | Auto  |
+--------+--------------------------+-------------------------------+-------+
| CNAME  | *                        | django-Publi-hAbWrIU...com    | Auto  |
+--------+--------------------------+-------------------------------+-------+
```

Then attach the certificate to the environment manifest at copilot/environments/dev/manifest.yml:

```yaml
# copilot/environments/dev/manifest.yml
http:
  public:
    certificates:
      - arn:aws:acm:eu-central-1:938748293:certificate/758effe9-abb0-c35ab3b7b697
```

And add the alias to the SVC:

```yaml
# copilot/web/manifest.yml
http:
  alias: ['mywebapp.click']
```

Deploy the environment and the service once again:

```sh
$ copilot env deploy --name dev
$ copilot svc deploy --name web --env dev
```

Yay! Your web app should now be accessible at your custom domain. On top of that, it should be served over HTTPS with a valid SSL certificate.

Conclusion

In this tutorial, we walked you through the process of deploying a Django app to AWS using AWS Copilot CLI. By now, you should have a fair understanding of Copilot and be able to deploy your own Django apps.

The final source code is available on GitHub.

Next steps:

- Upgrade your task definition specs (in copilot/web/manifest.yml).

- Bump your Postgres database specs (in copilot/environments/addons/postgres.yml).

- Set up AWS S3 to persist Django static and media files between service restarts.

To remove all the AWS resources we've created throughout the tutorial:

- Run `copilot app delete --name django-images`.

- Go to your CloudFormation dashboard and remove all the stacks.

- Manually remove any remaining resources.

To list your AWS resources, you can use AWS Tag Editor or AWS Resource Explorer.

Nik Tomazic
