AI has become an important part of our development processes. As with all tools, you need to choose the right one for your development workflow. So, in this article, we'll look at leveraging two AI tools designed for software development, Claude and Cursor, and compare and contrast them for Django web development.

While Claude is an AI model that can be easily integrated into PyCharm, Cursor is an AI editor built on top of VSCode.

Process

To make the comparison as fair as possible, I used the same prompts for both tools. The exception was the follow-up prompts needed to fix problems after the first prompt: those differed because each tool produced different issues.

None of the prompts I provided were very technical. I didn't specify models, endpoints, or anything similar, since I wanted to compare how well the tools create an entire web application from scratch. When solving the problems they created, I behaved as little like a knowledgeable user as possible: I described problems but not solutions.

The first prompt, whose purpose was to create the whole application, was detailed and well explained in terms of requirements but not implementation. I told them what I expected from the app, which technologies to use, and that they should follow best practices. The app they were asked to create was a party organizer application, the same as in my HTMX course.

The requirements were the following:

- The app should manage three resources:
  - Party (date, time, location, invitation, organizer)
  - Gifts (linked to a specific party, with name, price, and link)
  - Guests (linked to a specific party, with name and attending status)
- Users should be able to create new parties or edit existing ones. The party organizer should also be able to add, edit, and delete gifts, as well as update guest attendance statuses.

The follow-up prompts, meant to show how each tool handles changes such as adding tests or new functionality, were also light on detail, for example: "Add tests that cover important parts of the app using pytest".

Initial App

Claude

I added Claude to PyCharm so I could use it directly in the terminal. It's well integrated, and I liked that it allowed me to see changes in a comparison window. If you're used to JetBrains' products, you'll probably like it.

It creates a TODO list, so you know what to expect. You can accept changes in bulk, but I accepted every change individually to avoid missing anything. It created a superuser, ran the app, and showed how to open the app and the admin interface.

I noticed it was too agreeable. If I stated something, it didn't check if it was correct -- it just did what I said.

Creating the first version of the app took 9 minutes. It would have been even faster if I had opted out of accepting each change separately.

It took an additional 6 prompts and 25 minutes to get the app working properly.

Almost all errors were fixed on the first try, just by copying the error to Claude and asking for a fix. Only one error took two tries to fix.

Some of the fixes felt like quick “first try” solutions. For example, clearing the form after submission was handled with a tiny JavaScript snippet. It worked, but with Django + HTMX there are cleaner options, like returning the form in the response or using an out-of-band swap.

The design with Claude was okay out of the box.

Claude made the app mobile-friendly without prompting. The app looked mostly fine on mobile, with only minor issues.

Cursor

Cursor has its own IDE based on VSCode. If you're used to VSCode, you'll probably like it. In my opinion, Cursor is better suited for an all-AI workflow where you type prompts to get what you want, while Claude is more useful as a helper when you're still in control.

The first version in Cursor took 10 minutes. Getting to a working version required 7 additional prompts and 27 minutes.

Even though the prompt didn't require party deletion, Cursor added that functionality on its own.

Unlike Claude, login/logout functionality was not added automatically; I had to provide a separate prompt.

Cursor did almost no design initially, but it was quickly improved with a single prompt.

There were some issues with Cursor placing imports inside functions instead of at the top of files.

Interestingly, Cursor also added a README file.

A large difference between Cursor and Claude was how they handled creating and editing instances. Claude created three Django ModelForms and handled them appropriately in function-based views, while Cursor only created a ModelForm for the party model. Gift and guest creation and editing were handled by class-based views extending CreateView and UpdateView.

Tests

Claude

After adding tests, it tried to run them, but they failed, and it started adding strange fixes. The problem was missing Django settings; unable to fix that, it began adding imports inside test functions. After a few of these workarounds, I stopped it and asked it to remove them, which it did without issue.

At some point, I wrote my own test and prompted it to add similar tests. After that, Claude added 21 valid tests.

Cursor

In total, 7 tests were created. Tests were automatically placed in a separate folder and split into two files: test_parties.py and test_htmx.py. Tests for guests and gifts were added to test_htmx.py, while party-related tests were added to test_parties.py. It also created a pytest.ini file. While the tests could be improved and the split into the two files was a bit strange, they were sufficient.

Changing the App

Initially, all users could see the list of all parties. I prompted both tools to limit the list to the parties the current user organizes. Another change I requested was to make the color scheme more "elegant," without specifying exact colors.

Claude

- Limiting the party list to the organizer's parties was done without problems.

- Changing the color palette was done successfully but required more than one prompt to update the whole app.

Cursor

- Limiting the party list to the organizer's parties was done without problems. It also updated the tests without being asked.

- Changing the color palette was done successfully but required more than one prompt to update the whole app.

Working with an Existing App

The first part of my comparison was done with extreme "vibe coding"; the app was created with as little guidance as possible. I also wanted to see how the AI would perform on existing code when given smaller, more focused tasks. For that, I used the app I built in the Full-stack Django with HTMX and Tailwind course, so they could follow my style.

I asked for two changes:

- Adding links from the list page to the detail page.

- Allowing any logged-in user to mark a gift as bought, but only the organizer can mark it as not bought.
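The second task encodes an asymmetric permission rule. Stripped of Django, it can be sketched as a small function. The dataclasses below are hypothetical stand-ins for request.user and the Party and Gift models, and the function name is made up; in Django, the equality check would compare model instances by primary key.

```python
from dataclasses import dataclass


@dataclass
class User:
    # Hypothetical stand-in for request.user.
    username: str
    is_authenticated: bool = True


@dataclass
class Party:
    organizer: User


@dataclass
class Gift:
    party: Party
    bought: bool = False


def can_set_bought(user, gift, bought):
    """Return True if `user` may set `gift.bought` to `bought`."""
    if not user.is_authenticated:
        return False
    if bought:
        # Any logged-in user may mark a gift as bought.
        return True
    # Only the party organizer may mark it as not bought again.
    return user == gift.party.organizer
```

Writing the rule down like this also makes the two required tests obvious: a guest can mark a gift as bought but not undo it, and the organizer can do both.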

The differences here were smaller.

Cursor

The simple task, duplicating the links, was done quickly without any issues. The more complicated task, expanding existing functionality, required an additional prompt but was otherwise done well.

Cursor followed my code style (e.g., adding data-cy attributes), but it also added multiple tests, sometimes unnecessarily changing the structure. Many of the added tests initially failed because of mismatched expectations; Cursor blamed the cached Django app, and fixing the tests took multiple technical prompts.

Claude

Again, duplicating links was done quickly without any issues. Expanding functionality was also done without issues and required no additional prompts. Unlike Cursor, it didn't add extra tests but added the data-cy attribute consistently.

Cursor vs Claude

I used the paid versions of both tools. The free version of Claude can't be used with PyCharm at all. The free version of Cursor performs much worse: achieving a similar result would require five times as many prompts and more coding knowledge.

Cursor has its own IDE and terminal, so there's no need to copy/paste errors -- it checks the terminal itself. It's better at fixing non-code-related errors, like DJANGO_SETTINGS_MODULE misconfigurations, and can run tests and migrations on its own.

Django has strict rules and a long history; HTMX is newer and less strict. Both tools had more issues with HTMX than with Django.

| Category | Claude | Cursor |
|---|---|---|
| Time to first version of the app (min) | 9 | 10 |
| Time to working version of the app (min) | 25 | 27 |
| Additional prompts to a working version | 6 | 7 |
| Design | Out of the box | Additional prompt |
| Login/Logout | Out of the box | Additional prompt |
| Function/Class-based views | Leaning more toward FBV | Leaning more toward CBV |
| Tests | Didn't manage to create tests on its own; succeeded after a few examples | Split into multiple files; tests were done well |
| Number of Tests | 21 | 7 |
| Small Changes to the App | Same performance | Same performance |
| Changing the Existing App | Consistent with style, slightly better at adding new functionality | Consistent with style, needed an additional prompt for changes |
| Free Versions | Can't be used as a code editor | Can be used as an editor, but the generated code is worse |

From an end-user perspective, both AI-generated apps function similarly, with no significant differences in how they behave in the browser.

Code-wise, there are some minor differences. Cursor does more on its own (e.g., adding a README and new tests without prompts) and is better at fixing errors, making it useful for rapid prototypes. My impression is that it also makes more use of what Django provides (e.g., UserPassesTestMixin, which I wasn't aware of before). However, those advantages are also disadvantages, since they make it less consistent, more error-prone, and harder to control.

Examples

When the two AI agents follow an existing code style (as when adding to a pre-existing app), their output is similar, but on a blank project their approaches can be quite different. Below are some of the similarities and differences in what they produced.

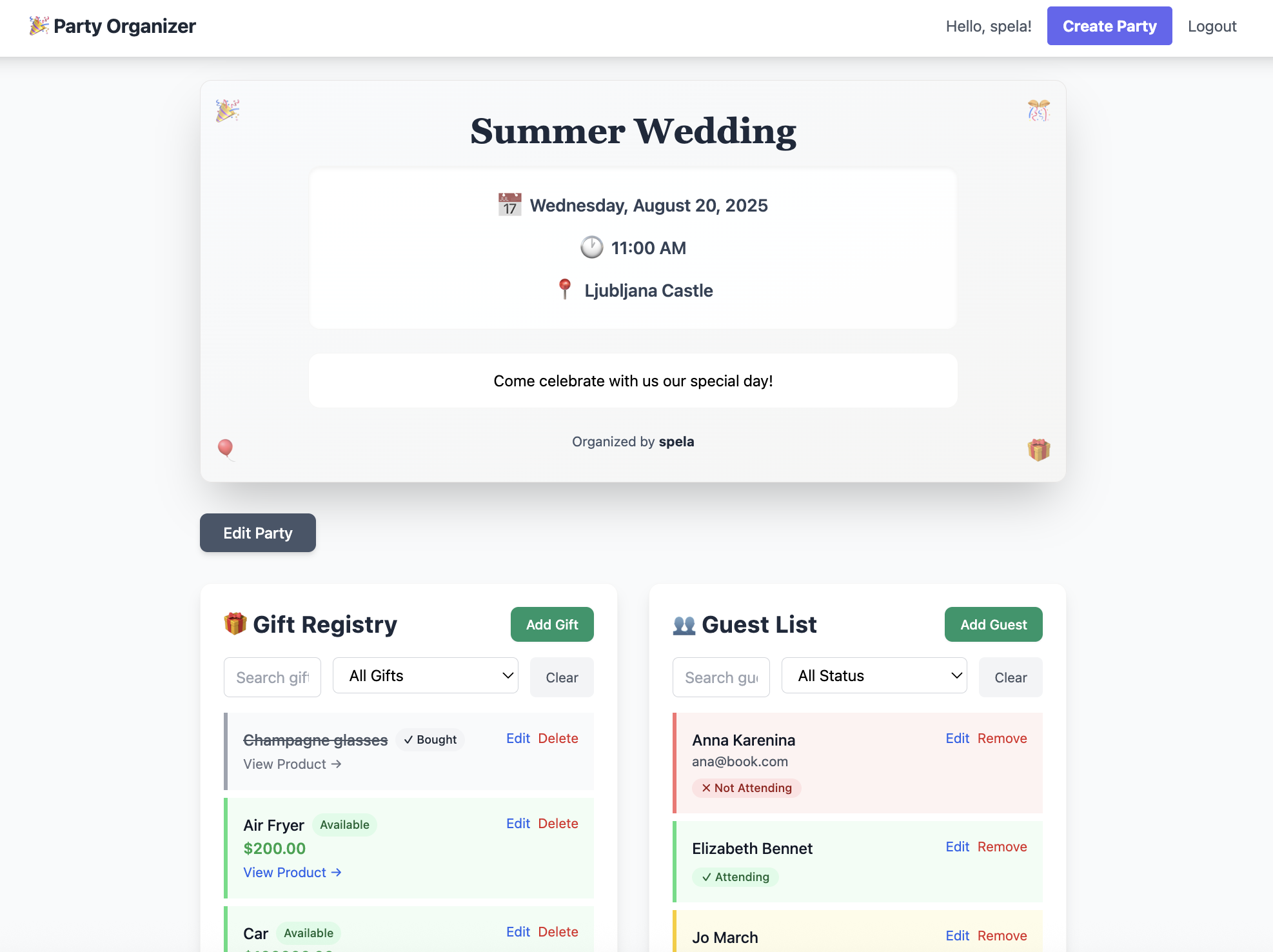

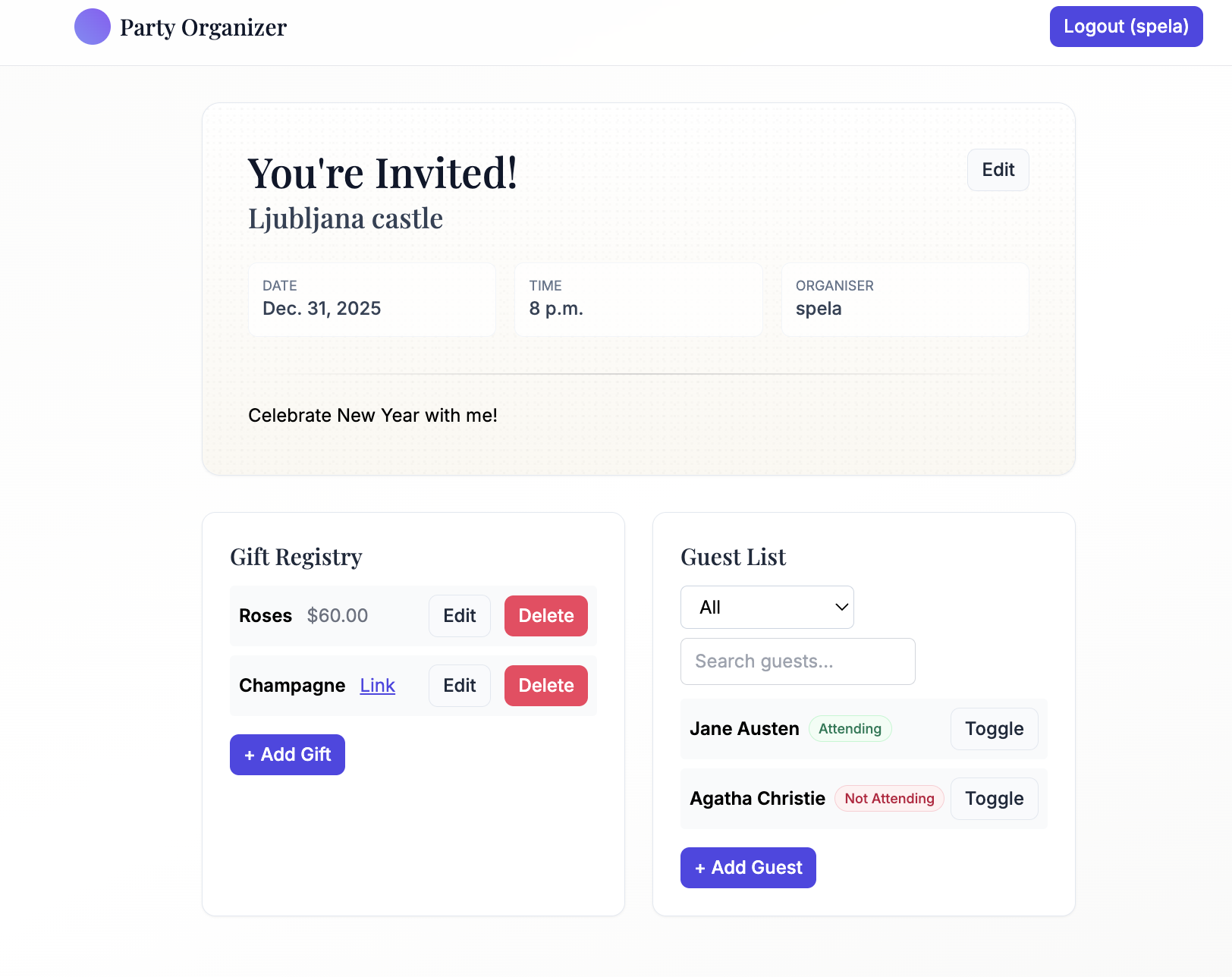

The design

Claude:

Cursor:

Even though I gave very little input on the design, the two apps look quite similar.

Endpoint added to existing project

Claude:

```python
@login_required
@require_http_methods(["PUT"])
def mark_gift_not_bought_partial(request, gift_uuid):
    gift = get_object_or_404(Gift, uuid=gift_uuid)
    if request.user != gift.party.organizer:
        return HttpResponse("Unauthorized", status=403)
    gift.bought = False
    gift.save()
    return render(
        request,
        "party/gift_registry/partial_gift_detail.html",
        {"gift": gift, "party": gift.party}
    )
```

Cursor:

```python
@require_http_methods(["POST"])
def mark_gift_unbought(request, gift_uuid):
    """Only the party organizer can mark a gift as not bought"""
    gift = get_object_or_404(Gift, uuid=gift_uuid)

    # Check if user is the party organizer
    if request.user != gift.party.organizer:
        return render(
            request,
            "party/gift_registry/partial_gift_detail.html",
            {"gift": gift, "party": gift.party}
        )

    gift.bought = False
    gift.save()
    return render(
        request, "party/gift_registry/partial_gift_detail.html",
        {"gift": gift,
         "party": gift.party}
    )
```

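While the endpoints are similar, they accept different HTTP methods: PUT for Claude and POST for Cursor. They also handle the request.user != gift.party.organizer case differently: Claude returns a 403 response, while Cursor re-renders the unchanged gift partial. Claude also added login_required on its own.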

Add instance endpoint

Claude:

```python
@login_required
def add_gift(request, pk):
    party = get_object_or_404(Party, pk=pk)
    if request.user != party.organizer:
        return JsonResponse({"error": "Permission denied"}, status=403)

    if request.method == "POST":
        form = GiftForm(request.POST)
        if form.is_valid():
            gift = form.save(commit=False)
            gift.party = party
            gift.save()
            if request.headers.get("HX-Request"):
                context = {"gift": gift, "is_organizer": True}
                response = render(request, "parties/gift_item.html", context)
                response["HX-Trigger"] = "clear-form"
                return response
            return redirect("party_detail", pk=party.pk)
        if request.headers.get("HX-Request"):
            return render(
                request, "parties/gift_form.html", {"form": form, "party": party}
            )
        messages.error(request, "Please correct the errors below.")
        return redirect("party_detail", pk=party.pk)

    # GET request - show form
    form = GiftForm()
    if request.headers.get("HX-Request"):
        return render(request, "parties/gift_form.html", {"form": form, "party": party})
    return redirect("party_detail", pk=party.pk)
```

Cursor:

```python
class GiftCreateView(LoginRequiredMixin, UserPassesTestMixin, CreateView):
    model = Gift
    fields = ["name", "price", "link"]
    template_name = "party_organizer/gift_form.html"

    def get_context_data(self, **kwargs):
        context = super().get_context_data(**kwargs)
        context["party"] = get_object_or_404(Party, pk=self.kwargs["party_pk"])
        return context

    def form_valid(self, form):
        party = get_object_or_404(Party, pk=self.kwargs["party_pk"])
        form.instance.party = party
        response = super().form_valid(form)
        if self.request.headers.get("HX-Request"):
            context = {"party": party, "user": self.request.user}
            html = render_to_string(
                "party_organizer/gift_list.html", context, request=self.request
            )
            return HttpResponse(html)
        return response

    def form_invalid(self, form):
        party = get_object_or_404(Party, pk=self.kwargs["party_pk"])
        if self.request.headers.get("HX-Request"):
            html = render_to_string(
                "party_organizer/gift_form.html",
                {"form": form, "party": party},
                request=self.request
            )
            return HttpResponse(html, status=400)
        return super().form_invalid(form)

    def test_func(self):
        party = get_object_or_404(Party, pk=self.kwargs["party_pk"])
        return self.request.user == party.organizer

    def get_success_url(self):
        return reverse_lazy(
            "party_organizer:party_detail", kwargs={"pk": self.kwargs["party_pk"]}
        )
```

The agents tackled the creation/update endpoints in very different ways. Claude's code was more consistent: it created three ModelForms and handled them in function-based views. Cursor's code was less consistent, but it used CBVs and took advantage of generic views and mixins.

Conclusion

Using both agents helped me understand which one I prefer, but someone else in the same situation might choose differently. The differences aren't huge, but one will likely feel more natural depending on your preferred IDE, coding style, and communication approach.

It's also important to note that, since I wanted a fair comparison, I didn't tweak the AI agents in any way. I didn't add specific instructions that could improve the prompts, and I wasn't very detailed in my requests.

While both tools significantly speed up development, knowing how to code is still as necessary as ever. You can create the first version of the app and fix initial errors without deep understanding by copying errors to AI, but tasks like adding tests still require knowledge. With Claude, I added tests manually; with Cursor, I had to make specific code fixes.

You shouldn't blindly trust AI-generated code, especially tests. Write the most important tests yourself and carefully review the code it produces. Split the tasks into smaller chunks, so you can better monitor the changes.

Letting AI create things independently can make navigation and code review exhausting. AI can speed up development, but recognizing bad code and security issues remains crucial.

Want to see a FastAPI flavor of this? Check out Cursor vs. Claude for FastAPI Development.

Špela Giacomelli (aka GirlLovesToCode)
